Unravel on Databricks SaaS Setup Guide

This page provides step-by-step instructions for integrating Unravel with Databricks SaaS.

Databricks Components

Service Principal Creation

A service principal (SPN) is required to authenticate Unravel to the Databricks API. This non-human identity gives Unravel secure, programmatic access for monitoring and data collection, so Unravel can monitor and optimize your Databricks environment without relying on individual user credentials.

To create and configure a service principal:

Create the service principal (SPN):

For AWS, follow the official AWS Databricks Documentation.

For Azure, follow the official Azure Databricks Documentation.

As a workspace admin, create a personal access token (PAT) for the service principal from the command line:

For AWS, follow the official AWS Databricks Documentation.

For Azure, follow the official Azure Databricks Documentation.
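The token request can also be scripted. The following is a minimal sketch, assuming the Databricks Token Management endpoint `POST /api/2.0/token-management/on-behalf-of/tokens` is available in your workspace; confirm this in the official documentation for your cloud. The function name, the 90-day lifetime, and the placeholder host, admin token, and application ID are illustrative assumptions. The sketch only builds the request and does not send it.

```python
import json
import urllib.request


def build_obo_token_request(host, admin_token, application_id,
                            lifetime_seconds=90 * 24 * 3600,
                            comment="Unravel monitoring"):
    """Build (but do not send) a request that asks Databricks to create
    a token on behalf of a service principal."""
    body = {
        "application_id": application_id,      # the SPN's application ID
        "lifetime_seconds": lifetime_seconds,  # assumed 90-day lifetime
        "comment": comment,
    }
    return urllib.request.Request(
        url=f"{host.rstrip('/')}/api/2.0/token-management/on-behalf-of/tokens",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {admin_token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    req = build_obo_token_request(
        "https://<databricks-instance>", "<admin-token>", "<spn-application-id>")
    print(req.get_method(), req.full_url)
    # To send the request, pass it to urllib.request.urlopen(req).
```

Keeping request construction separate from sending makes it easy to review the payload before an admin token is used.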

Databricks - API Permissions

After creating the service principal, configure essential permissions to ensure Unravel operates with the minimum necessary privileges.

To configure API permissions:

Go to Settings > Workspace Admin in your Databricks workspace.

Under Identity and Access, locate the service principal and click Manage.

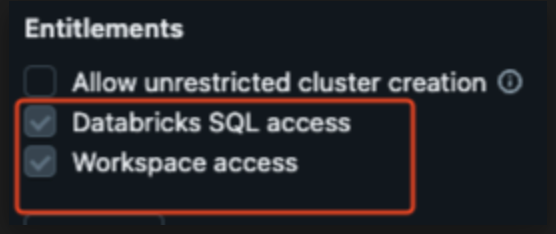

Under the Configuration tab for the SPN, ensure the following entitlement is enabled:

Databricks SQL access: Grants access to Databricks SQL.

Enable Personal Access Tokens in Advanced Settings.

Navigate to Settings > Workspace Admin > Advanced.

Find the Personal Access Tokens section and ensure it is enabled.

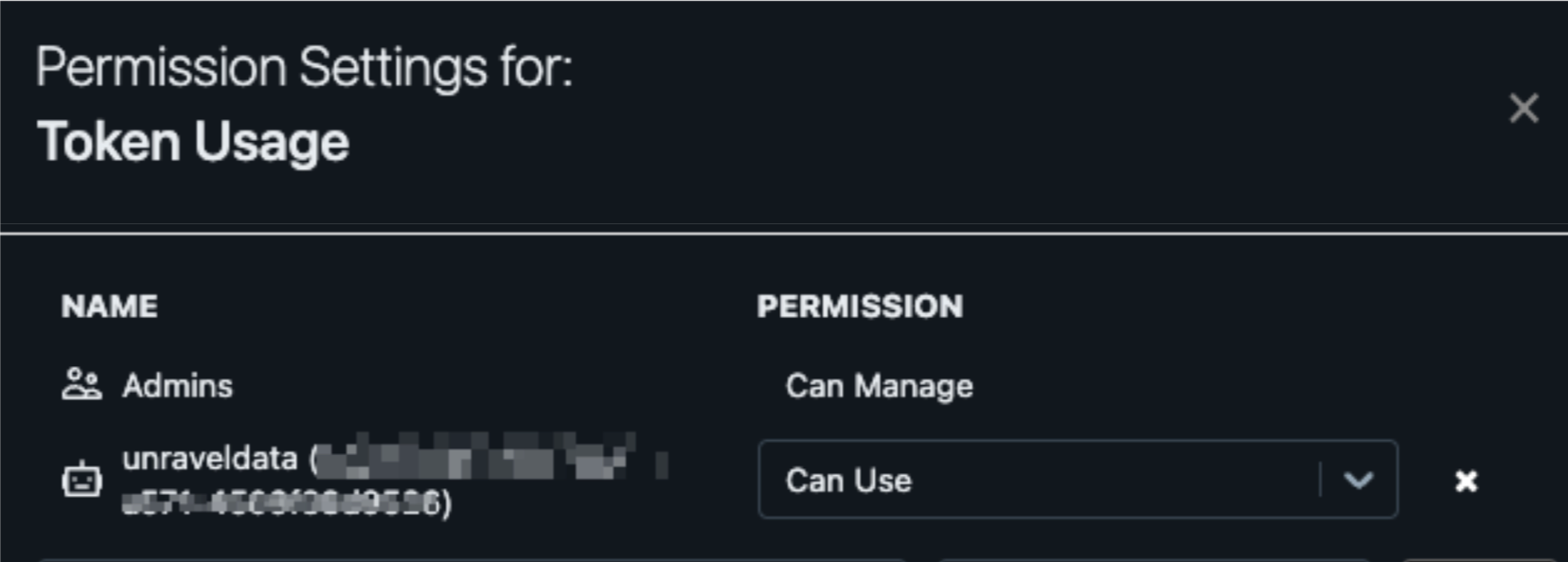

Assign the CAN USE permission for token usage to the service principal.
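The token usage permission can also be assigned through the Databricks Permissions API instead of the UI. This is a minimal sketch, assuming the `authorization/tokens` permissions endpoint and that the service principal is identified by its application ID. The function name and placeholder ID are hypothetical.

```python
import json

# Permissions API path for workspace token usage. PATCH adds the entry
# without replacing permissions that are already set.
ENDPOINT = "/api/2.0/permissions/authorization/tokens"


def token_usage_acl(application_id):
    """Return the JSON body granting CAN_USE on tokens to one service principal."""
    return {
        "access_control_list": [
            {
                "service_principal_name": application_id,
                "permission_level": "CAN_USE",
            }
        ]
    }


if __name__ == "__main__":
    print("PATCH", ENDPOINT)
    print(json.dumps(token_usage_acl("<spn-application-id>"), indent=2))
```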

Serverless Function

By default, non-admin tokens, including those used by service principals, do not have access to all users’ clusters and jobs in a Databricks workspace. Additionally, Databricks does not currently provide a built-in method to grant permissions to clusters and jobs that have not yet been created.

To address this, you must deploy a serverless function (AWS Lambda or Azure Function) in your environment. This function should run every minute with an admin token and automatically grant the following permissions to the Unravel service principal for any newly created resources:

CAN_ATTACH_TO permission for clusters

CAN_VIEW permission for jobs, pipelines, and SQL warehouses

For assistance with setting up this serverless function, contact Unravel support.
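The core of such a function might look like the following sketch. It is not Unravel's implementation. It assumes the Databricks Permissions API path pattern `/api/2.0/permissions/{type}/{id}`. It covers clusters, jobs, and pipelines only, because the path for SQL warehouses is not confirmed here. The names `PERMISSION_LEVELS` and `plan_grants` are hypothetical. Listing resources with the admin token and sending the planned requests are left out.

```python
# Permission level to grant for each resource type, as listed above.
PERMISSION_LEVELS = {
    "clusters": "CAN_ATTACH_TO",
    "jobs": "CAN_VIEW",
    "pipelines": "CAN_VIEW",
}


def plan_grants(resource_ids, application_id):
    """Return (method, path, body) tuples that grant the Unravel SPN access.

    resource_ids maps a resource type ("clusters", "jobs", "pipelines")
    to the IDs discovered during the current run.
    """
    planned = []
    for resource_type, ids in resource_ids.items():
        level = PERMISSION_LEVELS[resource_type]
        for resource_id in ids:
            body = {
                "access_control_list": [
                    {
                        "service_principal_name": application_id,
                        "permission_level": level,
                    }
                ]
            }
            # PATCH adds the grant without replacing existing permissions.
            path = f"/api/2.0/permissions/{resource_type}/{resource_id}"
            planned.append(("PATCH", path, body))
    return planned


if __name__ == "__main__":
    for method, path, _ in plan_grants(
            {"clusters": ["<cluster-id>"], "jobs": ["<job-id>"]},
            "<spn-application-id>"):
        print(method, path)
```

Because PATCH only adds the grant, repeating the same requests on every run should be harmless, which suits a function scheduled to run every minute.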

Sensor Deployment

An initialization script deploys a JAR-based Unravel sensor onto all compute instances within the workspace. This deployment is managed automatically via a cluster-scoped policy, so developers do not need to modify their code.

Init Script

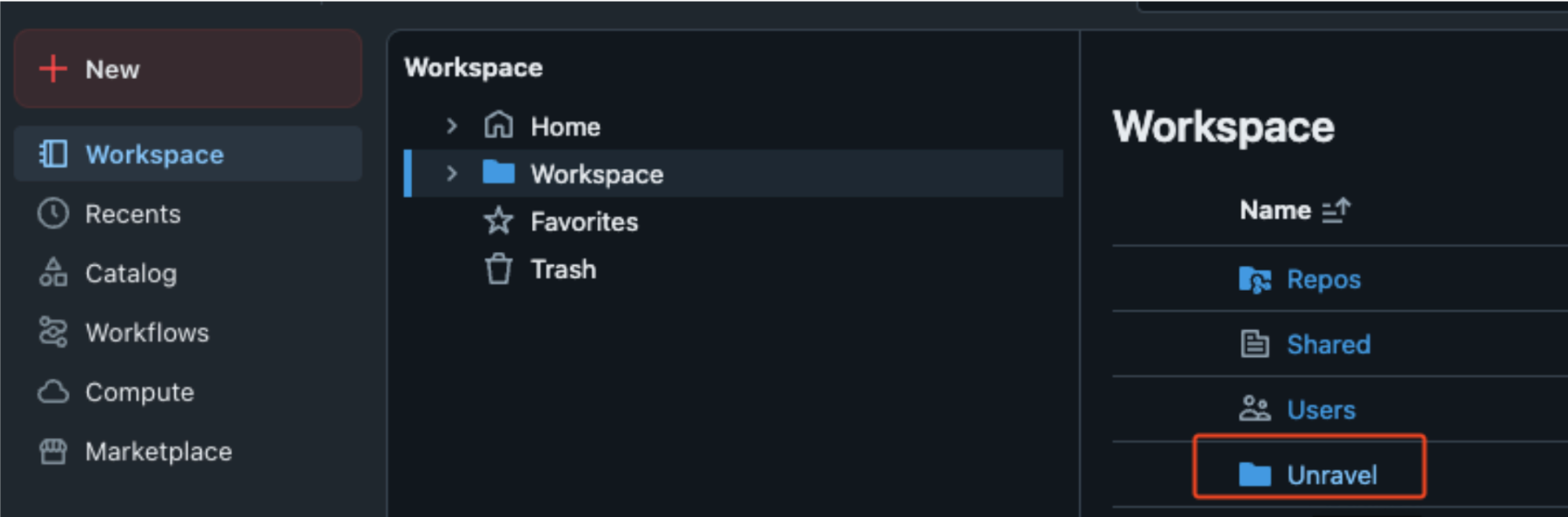

Create a folder named Unravel in the workspace directory.

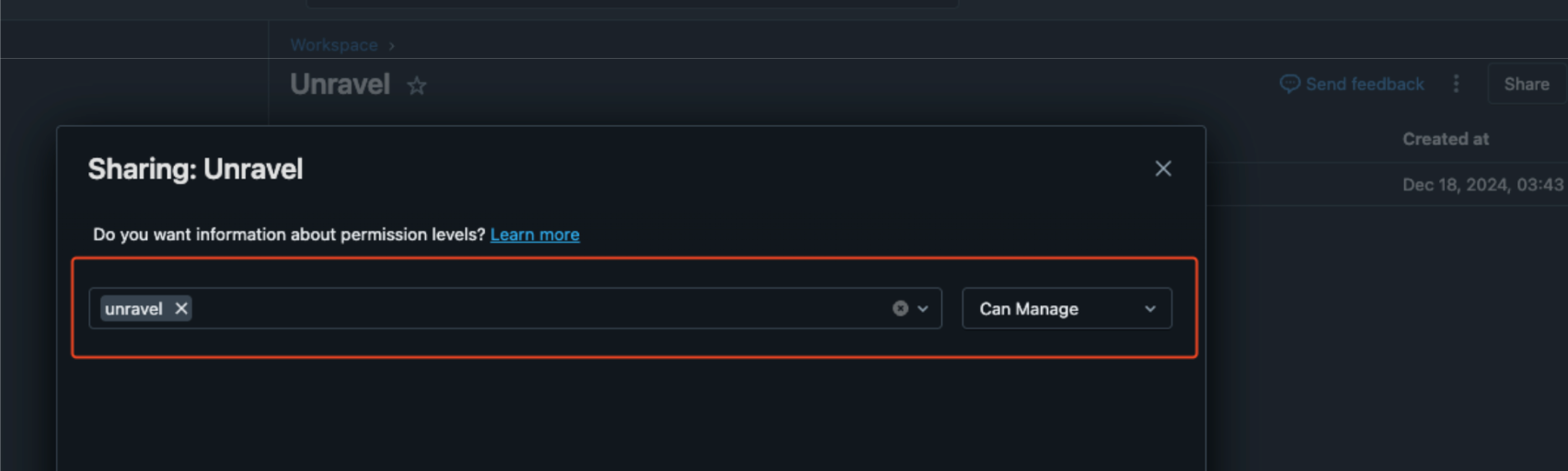

Assign CAN_MANAGE permissions to the Unravel service principal.

Cluster-Scoped Policy

Unravel recommends loading the Unravel init script using cluster-scoped init policies. This ensures the sensor is deployed to every compute instance automatically.

Apply the following property to a new or existing cluster-scoped policy:

{ "clusters": [ { "init_scripts": [ { "workspace": { "destination": "$INIT_SCRIPT_PATH" } } ] } ] }

Replace $INIT_SCRIPT_PATH with the actual path to your Unravel init script (for example, /Workspace/Unravel/install-unravel.sh).
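If you generate the property with a script, substituting the path and parsing the result catches malformed JSON before it reaches a policy. This is a minimal sketch. The function name is hypothetical, and the template mirrors the property shown above without checking it against the Databricks policy schema.

```python
import json
from string import Template

# The property from this guide, with $INIT_SCRIPT_PATH left as a placeholder.
POLICY_TEMPLATE = Template(
    '{ "clusters": [ { "init_scripts": [ '
    '{ "workspace": { "destination": "$INIT_SCRIPT_PATH" } } ] } ] }'
)


def render_policy(init_script_path):
    """Fill in the init script path and confirm the result is valid JSON."""
    # json.dumps escapes quotes and backslashes; [1:-1] drops the outer quotes.
    escaped = json.dumps(init_script_path)[1:-1]
    rendered = POLICY_TEMPLATE.substitute(INIT_SCRIPT_PATH=escaped)
    return json.loads(rendered)


if __name__ == "__main__":
    policy = render_policy("/Workspace/Unravel/install-unravel.sh")
    print(json.dumps(policy, indent=2))
```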

Network Components

By default, Unravel sensors send data to the Unravel data plane using TLS v1.2 encryption. This ensures that all traffic is encrypted while in transit.

If your organization needs to ensure that data does not traverse the public internet, use a PrivateLink endpoint to securely transmit Unravel sensor data. This approach keeps all traffic within your cloud provider’s private network.

Use a PrivateLink Endpoint

A PrivateLink endpoint allows Unravel sensor data to reach the Unravel data plane without any internet exposure.

To set up a PrivateLink endpoint:

Contact Unravel support to request a PrivateLink connection to your endpoint.

Verify the endpoint request in your cloud provider’s console.

Select your Databricks workspace VPC as the destination for the PrivateLink endpoint.

This configuration ensures that all Unravel sensor data is transmitted privately and securely, meeting strict network and compliance requirements.

Unravel Configuration

Register a new Databricks workspace or edit details of an existing Databricks workspace. During configuration, Unravel automatically copies the initialization script and sensor files to your workspace.

Sign in to Unravel UI, and from the upper right, click

> Workspaces. The Workspaces Manager page is displayed.

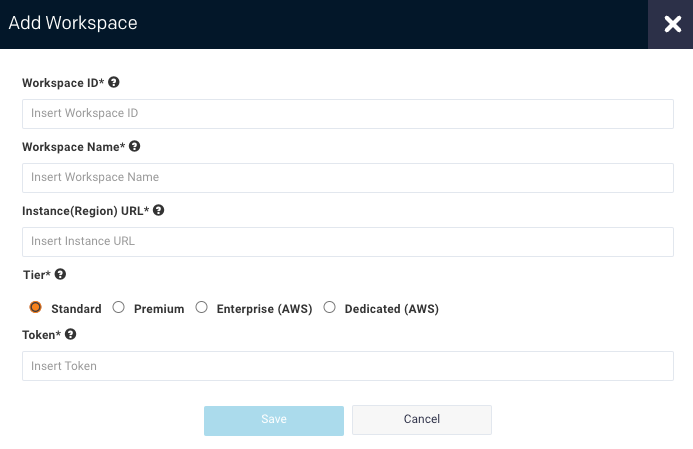

> Workspaces. The Workspaces Manager page is displayed.Click Add Workspace. The Add Workspace dialog box is displayed. Enter the following details:

Field

Description

Workspace Id

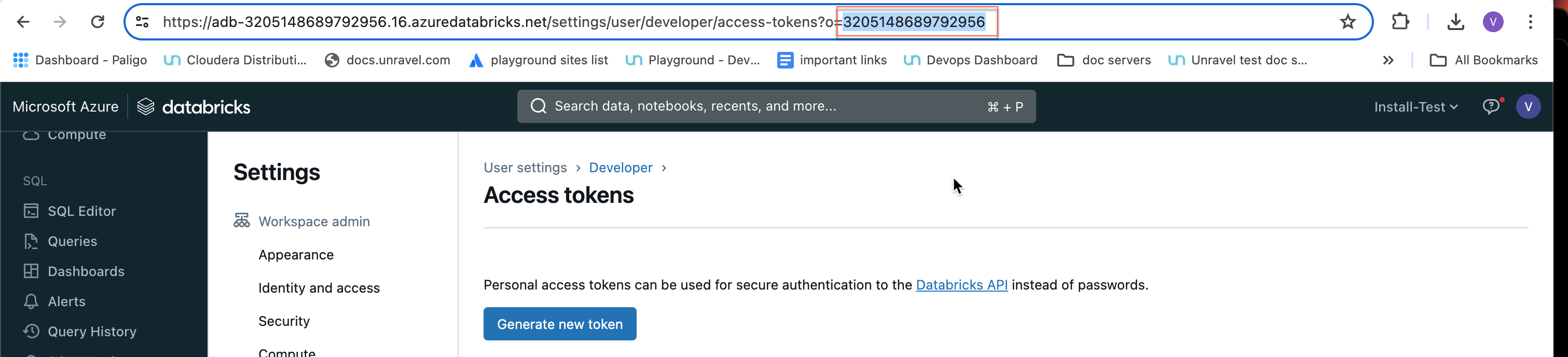

Databricks workspace ID can be found in the Databricks URL.

The numbers shown after o= in the Databricks URL become the workspace ID.

For example, in this URL:https://<databricks-instance>/?o=3205148689792956, the Databricks workspace ID is the number after o=, which is 3205148689792956.

Workspace Name

Databricks workspace name. A name for the workspace. For example,

ACME-Workspace. The Workspace name can be got from the Azure portal.Instance (Region) URL

Regional URL where the Databricks workspace is deployed. Specify the complete URL. The expected format is protocol://dns or ip(:port). Ensure that the URL does not end with a slash. For example, a valid input is: https://eastus.azuredatabricks.net. An invalid input is: https://eastus.azuredatabricks.net/.

The URL can be got from the Azure portal.

Tier

Select a subscription option from: Standard, Premium, Enterprise, and Dedicated. For Databricks Azure, you can get the pricing information from the Azure portal. For Databricks AWS you can get detailed information about pricing tiers from Databricks AWS pricing.

Token

Use the personal access token generated earlier for the Service Principal.
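The Workspace Id and Instance (Region) URL rules can be checked before you submit the dialog. This is a minimal sketch using Python's standard `urllib.parse`. The function names are hypothetical, and restricting the protocol to http or https is an assumption rather than a documented Unravel rule.

```python
from urllib.parse import parse_qs, urlparse


def workspace_id_from_url(url):
    """Return the digits after o= in a Databricks URL, or None if absent."""
    values = parse_qs(urlparse(url).query).get("o", [])
    return values[0] if values and values[0].isdigit() else None


def is_valid_instance_url(url):
    """Check for protocol://host(:port) with no trailing slash, path, or query."""
    parsed = urlparse(url)
    return (
        parsed.scheme in ("http", "https")  # assumed set of protocols
        and bool(parsed.hostname)
        and parsed.path == ""               # rejects a trailing slash
        and not parsed.query
        and not parsed.fragment
    )


if __name__ == "__main__":
    print(workspace_id_from_url(
        "https://<databricks-instance>/?o=3205148689792956"))
    print(is_valid_instance_url("https://eastus.azuredatabricks.net"))
    print(is_valid_instance_url("https://eastus.azuredatabricks.net/"))
```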

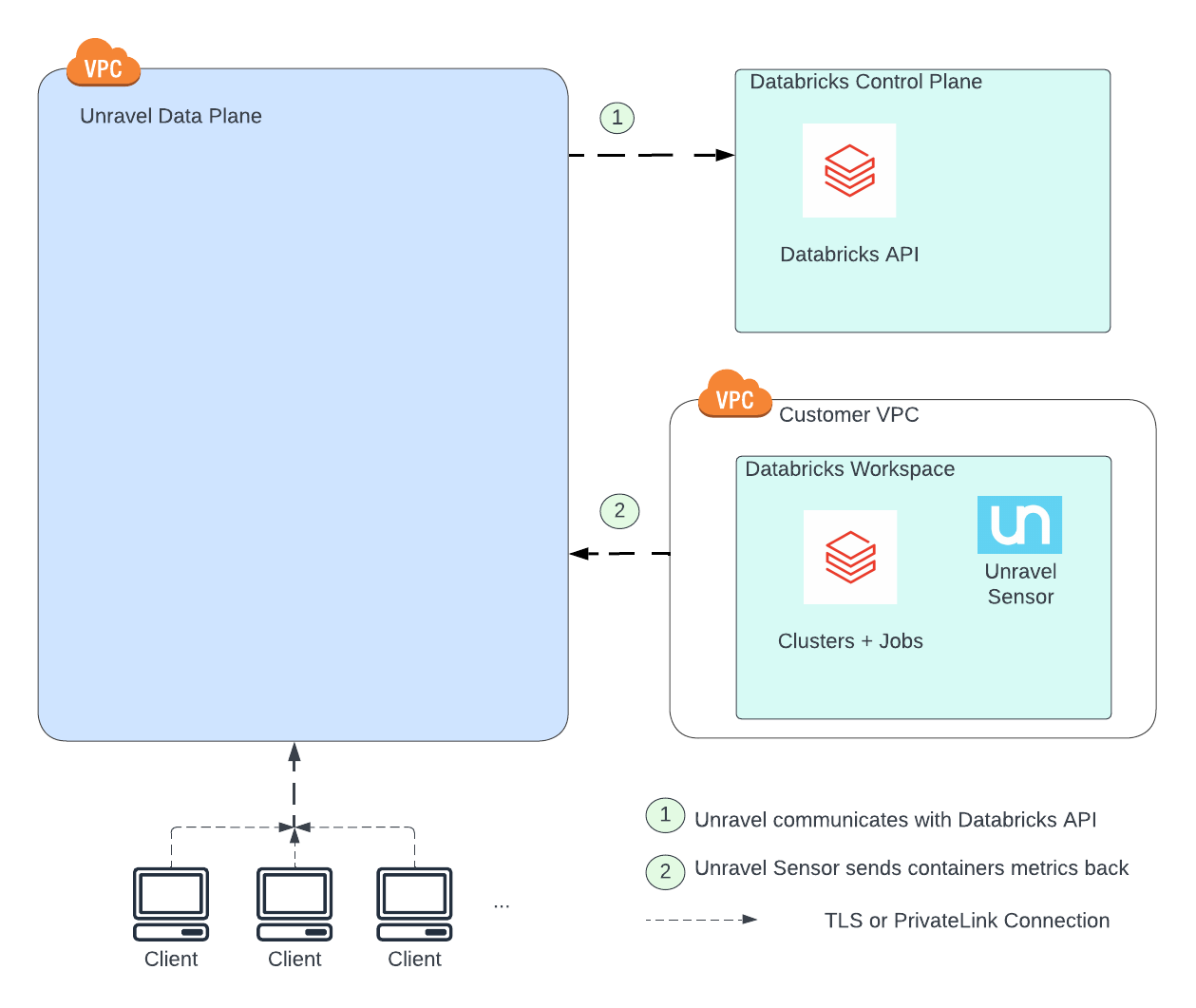

The architecture diagram shows the reference architecture for Unravel SaaS with Databricks and highlights the main components and their interactions:

Unravel fetches cluster, job, and other required information through the Databricks API.

The Unravel sensor is deployed on each monitored cluster to collect cluster metrics.

Connectivity Descriptions

| Reference connection from the architecture diagram | Method | Authentication | Encryption in transit |
|---|---|---|---|
| 1 | API | Choice of either Databricks Personal Access Token or Service Principal Name | TLS over HTTPS, port 443. Unravel connects to the Databricks API endpoint. |
| 2 | API | Choice of Unravel basic auth or Azure Active Directory (AAD) auth (via SAML 2) | TLS over HTTPS, port 443. Databricks connects to the Unravel API endpoint. |

Verify Configuration

Check that the Unravel sensor is deployed on all relevant clusters.

Monitor logs in the Unravel UI.

Verify that cluster and job data is being collected and displayed correctly.
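The first check can be partly automated. This is a minimal sketch that inspects cluster definitions, such as the `clusters` array returned by the Databricks `GET /api/2.0/clusters/list` endpoint, and reports clusters whose init scripts do not include the Unravel script. The function name and sample data are hypothetical. The sketch confirms only that the script is configured, not that the sensor is running.

```python
def clusters_missing_sensor(clusters, init_script_path):
    """Return names of clusters whose init_scripts lack the given workspace path."""
    missing = []
    for cluster in clusters:
        # Collect the workspace destinations of all configured init scripts.
        destinations = {
            script.get("workspace", {}).get("destination")
            for script in cluster.get("init_scripts", [])
        }
        if init_script_path not in destinations:
            missing.append(cluster.get("cluster_name", cluster.get("cluster_id")))
    return missing


if __name__ == "__main__":
    # Hypothetical sample shaped like entries in a clusters/list response.
    sample = [
        {"cluster_id": "a", "cluster_name": "etl", "init_scripts": [
            {"workspace": {"destination": "/Workspace/Unravel/install-unravel.sh"}}]},
        {"cluster_id": "b", "cluster_name": "adhoc"},
    ]
    print(clusters_missing_sensor(sample, "/Workspace/Unravel/install-unravel.sh"))
```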