Installing Unravel in a multi-cluster environment

The Multi-cluster feature allows you to manage multiple clusters from a single Unravel installation. Unravel 4.7 supports managing one or more clusters of the same cluster type. Supported cluster types include Cloudera Distribution of Apache Hadoop (CDH), Cloudera Data Platform (CDP), Hortonworks Data Platform (HDP), and Amazon Elastic MapReduce (EMR).

Note

Unravel multi-cluster support is available only for fresh installs. Unravel does not support multi-cluster management of combined on-prem and cloud clusters.

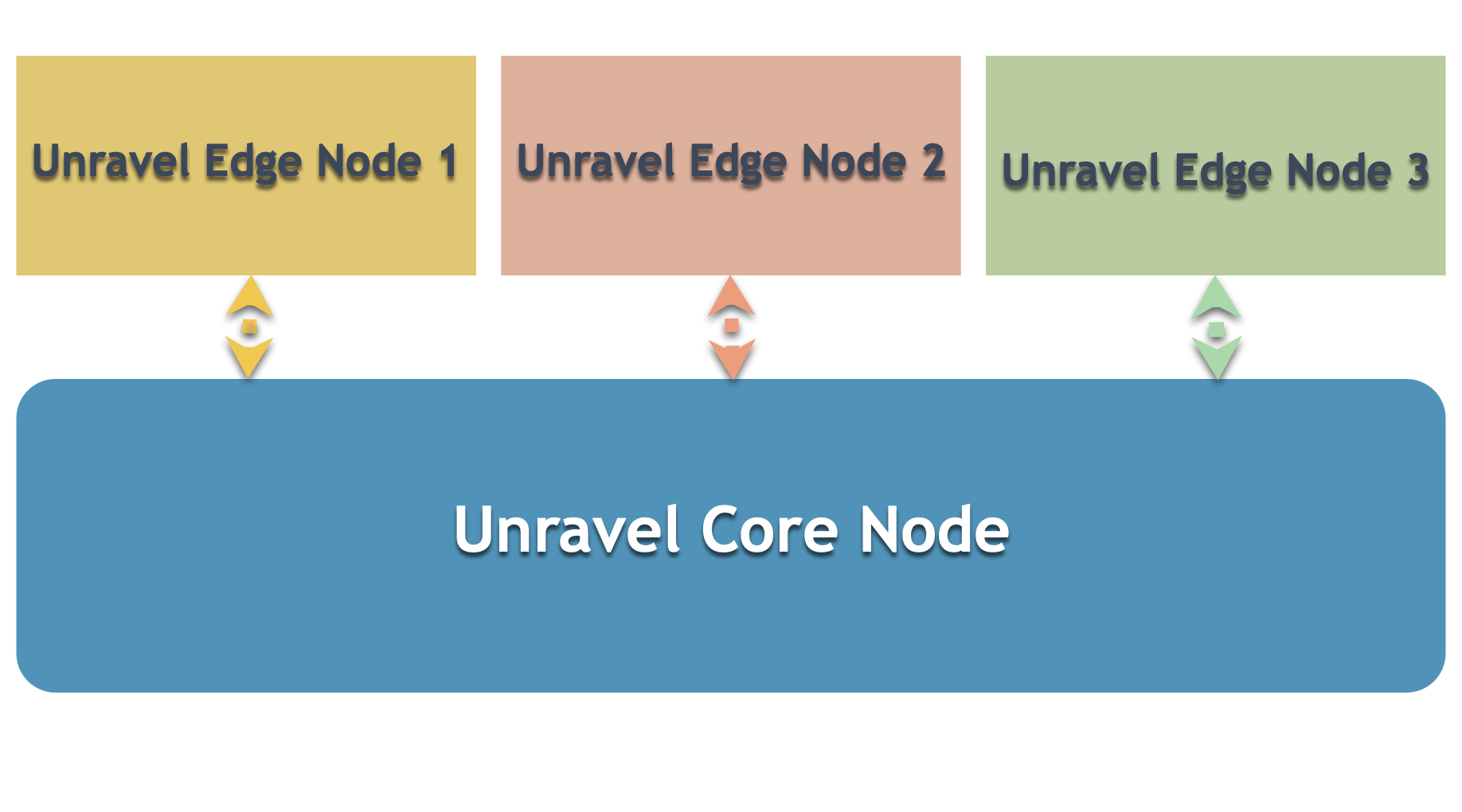

Multi-cluster deployment involves installing Unravel on the core node and one or more edge nodes. To know more about the multi-cluster architecture, refer to the Multi-cluster deployment layout. The following image depicts the basic layout of multi-cluster deployment.

To install and configure Unravel in a multi-cluster setup, check that the pre-requisites are fulfilled, and then do the following:

After the installation, you can also set additional configurations if required.

1. Install and set up Unravel on the core node

To install and setup Unravel on the core node, do the following:

Notice

If you already have a single cluster installation of Unravel, you can skip the following instructions to set up Unravel on a core node and proceed to Step 2 (Install and set up Unrav el on the edge node.)

1. Download Unravel

2. Deploy Unravel binaries

Unravel binaries are available as a tar file or RPM package. You can deploy the Unravel binaries in any directory on the server. However, the user who installs Unravel must have the write permissions to the directory where the Unravel binaries are deployed.

If the binaries are deployed to <Unravel_installation_directory>, Unravel will be available in <Unravel_installation_directory>/unravel. The directory layout for the Tar and RPM will be unravel/versions/<Directories and files>.

The following steps to deploy Unravel from a tar file must be performed by a user who will run Unravel.

Create an Installation directory.

mkdir

/path/to/installation/directoryFor example: mkdir /opt/unravel

Note

Some locations may require root access to create a directory. In such a case, after the directory is created, change the ownership to unravel user and continue with the installation procedure as the unravel user.

chown -R

username:groupname/path/to/installation/directoryFor example: chown -R unravel:unravelgroup /opt/unravel

Extract and copy the Unravel tar file to the installation directory, which was created in the first step. After you extract the contents of the tar file,

unraveldirectory is created within the installation directory.tar -zxf unravel-

<version>tar.gz -C/<Unravel-installation-directory>For example: tar -zxf unravel-4.7.x.x.tar.gz -C /opt The Unravel directory will be available within /opt

Important

A root user should perform the following steps to deploy Unravel from an RPM package. After the RPM package is deployed, the remaining installation procedures should be performed by the unravel user.

Create an installation directory.

mkdir /usr/local/unravel

Run the following command:

rpm -i unravel-

<version>.rpmFor example: rpm -i unravel-4.7.x.x.rpm

In case you want to provide a different location, you can do so by using the --prefix command. For example:

mkdir /opt/unravel chown -R

username:groupname/opt/unravel rpm -i unravel-4.7.0.0.rpm --prefix /optThe Unravel directory is available in /opt.

Grant ownership of the directory to a user who runs Unravel. This user executes all the processes involved in Unravel installation.

chown -R

username:groupname/usr/local/unravelFor example: chown -R unravel:unravelgroup /usr/local/unravel The Unravel directory is available in /usr/local.

Continue with the installation procedures as Unravel user.

3. Run the setup

You can install Unravel with an Unravel-supported database using the setup command. The setup command can be run with many other options. Refer to Setup options.

Whenever the setup command is run, the Precheck program is run automatically. This program detects issues that prevented a successful installation and provides suggestions to resolve them. Check Precheck filters for the expected value for each filter.

The setup command can be run with additional parameters to install Unravel with any Unravel-supported databases. Unravel-managed PostgreSQL, shipped with Unravel, does not need extra parameters with the setup command.

Tip

The Unravel data and configurations are located in the data directory. By default, the installer maintains the data directory under <Unravel installation directory>/unravel/data.

It is recommended to keep the data directory outside the unravel directory. To provide a different data directory location other than the default location, pass an extra parameter (--data-directory path/to/the/data/directory) with the setup command.

This section provides instructions to install Unravel with Unravel-managed PostgreSQL and external PostgreSQL.

After deploying the binaries, if you are the root user, switch to Unravel user.

su -

<unravel user>Run setup command and pass extra parameters to install Unravel with any of the following databases.

Note

The commands are different based on whether the core node, where you run the setup command, is a Hadoop client node or not.

Core node with Hadoop configuration

<Unravel installation directory>/unravel/versions/

<Unravel version>/setup ##Example: /opt/unravel/versions/abcd.1234/setupCore node without Hadoop configuration

<Unravel installation directory>/unravel/versions/

<Unravel version>/setup --enable-core ##Example: /opt/unravel/versions/abcd.1234/setup --enable-core

Precheck is run automatically when you run the setup command. Check the Precheck output to view issues that prevented a successful installation and suggestions for resolving them. You must resolve each of the issues before proceeding. See Precheck filters.

After the prechecks are resolved, you must re-login or reload the shell to execute the setup command again.

Notice

If you are using Unravel managed PostgreSQL database, and the Hive metastore is using MySQL, refer Set up Unravel Managed PostgreSQL for Hive metastore with MySQL

After deploying the binaries, if you are the root user, switch to Unravel user.

su -

<unravel user>Run setup command and pass extra parameters to install Unravel with any of the following databases.

Note

The commands are different based on whether the core node, where you run the setup command, is a Hadoop client node or not.

Core node with Hadoop configuration

<unravel_installation_directory>/versions/

<Unravel version>/setup --external-database postgresql<HOST><PORT><SCHEMA><USERNAME><PASSWORD>##Example: /opt/unravel/versions/abcd.1234/setup --external-database postgresql xyz.unraveldata.com 5432 unravel_db_prod unravel unraveldataCore node without Hadoop configuration

<unravel_installation_directory>/versions/

<Unravel version>/setup --enable-core --external-database postgresql<HOST><PORT><SCHEMA><USERNAME><PASSWORD>##Example: /opt/unravel/versions/abcd.1234/setup --enable-core --external-database postgresql xyz.unraveldata.com 5432 unravel_db_prod unravel unraveldataNotice

The

<HOST><PORT><SCHEMA><USERNAME><PASSWORD>are optional fields and are prompted if missing.

Precheck is run automatically when you run the setup command. Check the Precheck output to view issues that prevented a successful installation and suggestions for resolving them. You must resolve each of the issues before proceeding. See Precheck filters.

After the prechecks are resolved, you must re-login or reload the shell to execute the setup command again.

Note

In certain situations, you can skip the precheck using the setup --skip-precheck command.

For example:

/opt/unravel/versions/<Unravel version>/setup --skip-precheck

You can also skip the checks that you know can fail. For example, if you want to skip the Check limits option and the Disk freespace option, pick the command within the parenthesis corresponding to these failed options and run the setup command as follows:

setup --filter-precheck ~check_limits,~check_freespace

This section provides instructions to install Unravel with Unravel-managed MySQL and external MySQL.

Important

The following packages must be installed for fulfilling the OS-level requirements for MySQL:

numactl-libs (for libnuma.so)libaio (for libaio.so)

After deploying the binaries, if you are the root user, switch to Unravel user.

su -

<unravel user>Create a directory. For example,

mysqldirectory in/tmp. Provide permissions and make them accessible to the user who installs Unravel.Download the following tar files (MySQL 5.7 or 8.0) to this directory and provide the directory path when you run setup to install Unravel with Unravel-managed MySQL.

For MySQL 5.7

mysql-5.7.x-linux-glibc2.12-x86_64.tar.gzormysql-connector-java-5.1.x.tar.gz

For MySQL 8.0

mysql-8.0.x-linux-glibc2.12-x86_64.tar.gzmysql-connector-java-8.0.x.tar.gz

Run setup command and pass extra parameters to install Unravel with any of the following databases.

Note

The commands are different based on whether the core node, where you run the setup command, is a Hadoop client node or not.

Core node with Hadoop configuration

<unravel_installation_directory>/versions/

<Unravel version>/setup --extra /path/to/directory with MySQL tar files ##Example: /opt/unravel/versions/abcd.1234/setup --extra /tmp/mysqlCore node without Hadoop configuration

<unravel_installation_directory>/versions/

<Unravel version>/setup --enable-core --extra /path/to/directory with MySQL tar files ##Example: /opt/unravel/versions/abcd.1234/setup --enable-core --extra tmp/mysql

Precheck is run automatically when you run the setup command. Check the Precheck output to view issues that prevented a successful installation and suggestions for resolving them. You must resolve each of the issues before proceeding. See Precheck filters.

After the prechecks are resolved, you must re-login or reload the shell to execute the setup command again.

To install Unravel with an external MySQL database, you must provide the JDBC connector. This can either be as a tar file or as a jar file.

Create unravel schema and user on the target database where the unravel user should have full access to the schema.

##Example: CREATE DATABASE unravel_mysql_prod; CREATE USER 'unravel'@'localhost' IDENTIFIED BY 'password'; GRANT ALL PRIVILEGES ON unravel_mysql_prod.* TO 'unravel'@'localhost';

Create a directory for MySQL in

/tmp. Provide permissions and make them accessible to the user who installs Unravel.Add the JDBC connector to

/tmp/<MySQL-directory/jdbcconnector>directory. This can be either a tar file or a jar file.Run the setup command as an Unravel user to install Unravel with an external MySQL database:

Note

The commands are different based on whether the core node, where you run the setup command, is a Hadoop client node or not.

Core node with Hadoop configuration

<unravel_installation_directory>/versions/

<Unravel version>/setup --extra /tmp/<mysql-jdbc-directory> --external-database mysql<HOST><PORT><SCHEMA><USERNAME><PASSWORD>##Example: /opt/unravel/versions/abcd.1234/setup --extra /tmp/mysql-jdbc-connector --external-database mysql xyz.unraveldata.com 3306 unravel_db_prod unravel unraveldataCore node without Hadoop configuration

<unravel_installation_directory>/versions/

<Unravel version>/setup --enable-core --extra /tmp/<mysql-jdbc-directory> --external-database mysql<HOST><PORT><SCHEMA><USERNAME><PASSWORD>##Example: /opt/unravel/versions/abcd.1234/setup --enable-core --extra /tmp/mysql-jdbc-connector --external-database mysql xyz.unraveldata.com 3306 unravel_db_prod unravel unraveldataNotice

The

<HOST><PORT><SCHEMA><USERNAME><PASSWORD>are optional fields and are prompted if missing.

Precheck is run automatically when you run the setup command. Check the Precheck output to view issues that prevented a successful installation and suggestions for resolving them. You must resolve each of the issues before proceeding. See Precheck filters.

After the prechecks are resolved, you must re-login or reload the shell to execute the setup command again.

This section provides instructions to install Unravel with Unravel-managed MariaDB and external MariaDB.

Important

The following packages must be installed for fulfilling the OS level requirements for MariaDB:

numactl-libs (for libnuma.so)libaio (for libaio.so)

After deploying the binaries, if you are the root user, switch to Unravel user.

su -

<unravel user>Create a directory. For example,

mariadbdirectory in/tmp. Provide permissions and make them accessible to the user who installs Unravel.Download the following tar files to this directory and provide the directory path when you run setup to install Unravel with Unravel-managed MariaDB.

mariadb-<version>-linux-x86_64.tar.gzmariadb-java-client-<version>.jar

Run setup command and pass extra parameters to install Unravel with any of the following databases.

Note

The commands are different based on whether the core node, where you run the setup command, is a Hadoop client node or not.

Core node with Hadoop configuration

<unravel_installation_directory>/versions/

<Unravel version>/setup --extra /path/to/directory with MariaDB files ##For example: /opt/unravel/versions/abcd.1234/setup --extra /tmp/mariadbCore node without Hadoop configuration

<unravel_installation_directory>/versions/

<Unravel version>/setup --enable-core --extra /path/to/directory with MariaDB files ##Example: /opt/unravel/versions/abcd.1234/setup --enable-core --extra tmp/mariadb

Precheck is run automatically when you run the setup command. Check the Precheck output to view issues that prevented a successful installation and suggestions for resolving them. You must resolve each of the issues before proceeding. See Precheck filters.

After the prechecks are resolved, you must re-login or reload the shell to execute the setup command again.

Create unravel schema and user on the target database where the unravel user should have full access to the schema.

##Example: CREATE DATABASE unravel_mariadb_prod; CREATE USER 'unravel'@'localhost' IDENTIFIED BY 'password'; GRANT ALL PRIVILEGES ON unravel_mariadb_prod.* TO 'unravel'@'localhost';

Create a directory. For example,

mariadbdirectory in/tmp. Provide permissions and make them accessible to the user who installs Unravel.Add the JDBC connector to

/tmp/<MariaDB-directory/jdbcconnector>directory. This can be either a tar file or a jar file.Run the setup command as an Unravel user to install Unravel with an external MariaDB database:

Note

The commands are different based on whether the core node, where you run the setup command, is a Hadoop client node or not.

Core node with Hadoop configuration

<unravel_installation_directory>/versions/

<Unravel version>/setup --extra /path/to/mariadb-jdbc-directory --external-database mariadb<HOST><PORT><SCHEMA><USERNAME><PASSWORD>##Example: /opt/unravel/versions/abcd.1234/setup --extra /tmp/mariadb-jdbc-connector --external-database mariadb xyz.unraveldata.com 3306 unravel_db_prod unravel unraveldataCore node without Hadoop configuration

<unravel_installation_directory>/versions/

<Unravel version>/setup --enable-core --extra /path/to/mariadb-jdbc-directory --external-database mariadb<HOST><PORT><SCHEMA><USERNAME><PASSWORD>##Example: /opt/unravel/versions/abcd.1234/setup --enable-core --extra /tmp/mariadb-jdbc-connector --external-database mariadb xyz.unraveldata.com 3306 unravel_db_prod unravel unraveldataNotice

The

<HOST><PORT><SCHEMA><USERNAME><PASSWORD>are optional fields and are prompted if missing.

Precheck is run automatically when you run the setup command. Check the Precheck output to view issues that prevented a successful installation and suggestions for resolving them. You must resolve each of the issues before proceeding. See Precheck filters.

After the prechecks are resolved, you must re-login or reload the shell to execute the setup command again.

Tip

Run --help with the setup command and any combination of the setup command for complete usage details.

<unravel_installation_directory>/versions/<Unravel version>/setup --help

Note

In certain situations, you can skip the precheck using the setup --skip-precheck command.

For example:

/opt/unravel/versions/<Unravel version>/setup --skip-precheck

You can also skip the checks that you know can fail. For example, if you want to skip the Check limits option and the Disk freespace option, pick the command within the parenthesis corresponding to these failed options and run the setup command as follows:

setup --filter-precheck ~check_limits,~check_freespace

Following is a sample of the Precheck run result:

/opt/unravel/versions/abcd.1011/setup --enable-core --extra /tmp/mysql 2021-04-06 16:30:19 Sending logs to: /tmp/unravel-setup-20210406-163019.log 2021-04-06 16:30:19 Running preinstallation check... 2021-04-06 16:30:21 Gathering information ................ Ok 2021-04-06 16:30:21 Running checks ............... Ok -------------------------------------------------------------------------------- system Check limits : PASSED Clock sync : PASSED CPU requirement : PASSED, Available cores: 8 cores Disk access : PASSED, /opt/unravel/versions/abcd.1011/healthcheck/healthcheck/plugins/system is writable Disk freespace : PASSED, 213 GB of free disk space is available for precheck dir. Kerberos tools : PASSED Memory requirement : PASSED, Available memory: 95 GB Network ports : PASSED OS libraries : PASSED OS release : PASSED, OS release version: centos 7.9 OS settings : PASSED SELinux : PASSED Healthcheck report bundle: /tmp/healthcheck-20210406163019-xyz.unraveldata.com.tar.gz 2021-04-06 16:30:21 Found package: /tmp/mysql/mysql-5.7.27-linux-glibc2.12-x86_64.tar.gz 2021-04-06 16:30:21 Found package: /tmp/mysql/mysql-connector-java-5.1.48.tar.gz 2021-04-06 16:30:21 Prepare to install with: /opt/unravel/versions/abcd.1011/installer/installer/../installer/conf/presets/default.yaml 2021-04-06 16:30:25 Sending logs to: /opt/unravel/logs/setup.log 2021-04-06 16:30:25 Installing mysql server ............................................................................................................................................................................................................................................................................................................................................................................................ Ok 2021-04-06 16:30:42 Instantiating templates ......................................................................................................................................................................................................................... Ok 2021-04-06 16:30:47 Creating parcels .................................... Ok 2021-04-06 16:31:00 Installing sensors file ............................ Ok 2021-04-06 16:31:00 Installing pgsql connector ... Ok 2021-04-06 16:31:00 Installing mysql connector ... Ok 2021-04-06 16:31:02 Starting service monitor ... Ok 2021-04-06 16:31:07 Request start for elasticsearch_1 .... Ok 2021-04-06 16:31:07 Waiting for elasticsearch_1 for 120 sec ......... Ok 2021-04-06 16:31:14 Request start for zookeeper .... Ok 2021-04-06 16:31:14 Request start for kafka .... Ok 2021-04-06 16:31:14 Waiting for kafka for 120 sec ...... Ok 2021-04-06 16:31:16 Waiting for kafka to be alive for 120 sec ..... Ok 2021-04-06 16:31:20 Initializing mysql ... Ok 2021-04-06 16:31:27 Request start for mysql .... Ok 2021-04-06 16:31:27 Waiting for mysql for 120 sec ...... Ok 2021-04-06 16:31:29 Creating database schema ........ Ok 2021-04-06 16:31:31 Generating hashes .... Ok 2021-04-06 16:31:32 Loading elasticsearch templates ............ Ok 2021-04-06 16:31:35 Creating kafka topics .................... Ok 2021-04-06 16:32:10 Creating schema objects ................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................... Ok 2021-04-06 16:33:07 Request stop .................................................................... Ok 2021-04-06 16:33:26 Done

After you run the setup command, you must set Unravel license.

If the license has any issues, an error message is displayed. To view and resolve the licensing error messages, see Licensing error messages and troubleshooting.

Set the path of a license file.

<Unravel installation directory>/unravel/manager config license set

<path of a license file>Example:

/opt/unravel/manager config license set /tmp/license.txtThis command takes a filename as input and performs the following actions:

Reads the license file path and the license file

The license YAML file contains product licensing information, license validity and expiration date, and the licensed number of clusters and nodes.

Verifies whether it is a valid license

Adds the com.unraveldata.license.file property to the

unravel.propertiesfile. For information, see License property.

Note

If you do not provide the license filename, the

manager config license setcommand prompts for the license information. You can copy the content of the license file.Sample content of the license file:

##### BEGIN UNRAVEL LICENSE Licensee : ACME Disintegrating Pistol Manufacturing Valid from : 2022-12-16 00:00:00 UTC Expire after : 2023-10-16 23:59:00 UTC License type : Enterprise Licensed number of nodes : 1000000 Signature : c2Uvb2JqLnRhcmdldC92OF9pbml0aWFsaXplcnMvZ2VuL3RvcnF1ZS Revision : 1 ##### END UNRAVEL LICENSE #####

Apply the changes.

<Unravel installation directory>/unravel/manager config apply --restart

4. Add configurations

Optional: Set up Kerberos to authenticate Hadoop services.

Kerberos authentication

If you use Kerberos authentication, set the principal path and keytab, enable Kerberos authentication, and apply the changes.

<Unravel installation directory>/unravel/manager config kerberos set --keytab

</path/to/keytab file>--principal<server@example.com><Unravel installation directory>/unravel/manager config kerberos enable <unravel_installation_directory>/manager config applyTruststore certificates

If you are using Truststore certificates, run the following steps from the manager tool to add certificates to the Truststore.

Download the certificates to a directory.

Provide permissions to the user, who installs unravel, to access the certificates directory.

chown -R

username:groupname/path/to/certificates/directoryUpload the certificates using any of the following options:

## Option 1 <unravel_installation_directory>/unravel/manager config tls trust add

</path/to/the/certificate/filesor ## Option 2 <unravel_installation_directory>/unravel/manager config tls trust add --pem</path/to/the/certificate/files><unravel_installation_directory>/unravel/manager config tls trust add --jks</path/to/the/certificate/files><unravel_installation_directory>/unravel/manager config tls trust add --pkcs12</path/to/the/certificate/files>Enable the Truststore.

<unravel_installation_directory>/unravel/manager config tls trust

<enable|disable><unravel_installation_directory>/unravel/manager config applyVerify the connection.

<unravel_installation_directory>/unravel/manager verify connect <Cluster Manager-host> <Cluster Manager-port>

For example: /opt/unravel/manager verify connect xyz.unraveldata.com 7180 -- Running: verify connect xyz.unraveldata.com 7180 - Resolved IP: 111.17.4.123 - Reverse lookup: ('xyz.unraveldata.com', [], ['111.17.4.123']) - Connection: OK - TLS: No -- OK

TLS for Unravel UI

If you want to enable TLS for Unravel UI, refer to Enabling Transport Layer Security (TLS) for Unravel UI.

Start all the services.

<unravel_installation_directory>/unravel/manager start

Check the status of services.

<unravel_installation_directory>/unravel/manager report

The following service statuses are reported:

OK: Service is up and running

Not Monitored: Service is not running. (Has stopped or has failed to start)

Initializing: Services are starting up.

Does not exist: The process unexpectedly disappeared. Restarts will be attempted 10 times.

You can also get the status and information for a specific service. Run the manager report command as follows:

<unravel_installation_directory>/unravel/manager report <service> ## For example: /opt/unravel/manager report auto_action

Optionally, you can run healthcheck, at this point, to verify that all the configurations and services are running successfully.

<unravel_installation_directory>/unravel/manager healthcheck

Healthcheck is run automatically on an hourly basis in the backend. You can set your email to receive the healthcheck reports. Refer to Healthcheck for more details.

2. Install and set up Unravel on the edge node

To install and setup Unravel on the edge node, do the following:

1. Download Unravel

2. Deploy Unravel binaries

Unravel binaries are shipped as a tar file and an RPM package. You can deploy the Unravel binaries in any directory on the server. However, the user who installs Unravel must have write permissions to the directory where Unravel binaries are deployed.

After the Unravel binaries are deployed, the directory layout for the Tar and RPM will be unravel/versions/<Directories and files>. The binaries are deployed to <Unravel_installation_directory>, and Unravel will be available in <Unravel_installation_directory/unravel.

The following steps to deploy Unravel from a tar file should be performed by a user who will run Unravel.

Create an Installation directory.

mkdir

/path/to/installation/directory## For example: mkdir /opt/unravelNote

Some locations may require root access to create a directory. In such a case, after the directory is created, change the ownership to unravel user and continue with the installation procedure as the unravel user.

chown -R

username:groupname/path/to/installation/directory## For example:chown -R unravel:unravelgroup /opt/unravelExtract and copy the Unravel tar file to the installation directory, which was created in the first step. After you extract the contents of the tar file,

unraveldirectory is created within the installation directory.tar -zxf unravel-

<version>tar.gz -C /path/to/installation/directory ## For example: tar -zxf unravel-4.7.0.0.tar.gz -C /opt ## The unravel directory will be available within /opt

A root user should perform the following steps to deploy Unravel from an RPM package. After the RPM package is deployed, the remaining installation procedures should be performed by the unravel user.

Create an installation directory.

mkdir /usr/local/unravel

Run the following command:

rpm -i unravel-

<version>.rpm ## For example: rpm -i unravel-4.7.0.0.rpm ## The unravel directory will be available in /usr/localIn case you want to provide a different location, you can do so by using the --prefix command. For example:

mkdir /opt/unravel chown -R

username:groupname/opt/unravel rpm -i unravel-4.7.0.0.rpm --prefix /opt ## The unravel directory will be available in /optGrant ownership of the directory to a user who will run Unravel. This user executes all the processes involved in Unravel installation.

chown -R

username:groupname/usr/local/unravel ## For example: chown -R unravel:unravelgroup /usr/local/unravelContinue with the installation procedures as unravel user.

3. Install and set up Unravel on the edge nodes

Notice

Perform the following steps on each of the edge nodes in the cluster.

After deploying the binaries, if you are the root user, switch to Unravel user.

su -

<unravel user>Run setup as follows:

<installation_directory>/versions/4.7.x.x/setup --cluster-access<Unravel-host>##<Unravel-host>: specify the FQDN or the logical hostname of Unravel core node./opt/unravel/versions/develop.1002/setup --cluster-access xyz.unraveldata.com 2021-04-05 12:36:08 Sending logs to: /tmp/unravel-setup-20210405-123608.log 2021-04-05 12:36:08 Running preinstallation check... 2021-04-05 12:36:11 Gathering information ................. Ok 2021-04-05 12:36:35 Running checks .................. Ok -------------------------------------------------------------------------------- system Check limits : PASSED Clock sync : PASSED CPU requirement : PASSED, Available cores: 8 cores Disk access : PASSED, /opt/unravel/versions/develop.1002/healthcheck/healthcheck/plugins/system is writable Disk freespace : PASSED, 228 GB of free disk space is available for precheck dir. Kerberos tools : PASSED Memory requirement : PASSED, Available memory: 95 GB Network ports : PASSED OS libraries : PASSED OS release : PASSED, OS release version: centos 7.6 OS settings : PASSED SELinux : PASSED -------------------------------------------------------------------------------- hadoop Clients : PASSED - Found hadoop - Found hdfs - Found yarn - Found hive - Found beeline Distribution : PASSED, found CDP 7.1.3 RM HA Enabled/Disabled : PASSED, Disabled Healthcheck report bundle: /tmp/healthcheck-20210405123609-wnode58.unraveldata.com.tar.gz 2021-04-05 12:36:37 Prepare to install with: /opt/unravel/versions/develop.1002/installer/installer/../installer/conf/presets/cluster-access.yaml 2021-04-05 12:36:42 Sending logs to: /opt/unravel/logs/setup.log 2021-04-05 12:36:42 Instantiating templates ................................ Ok 2021-04-05 12:36:53 Starting service monitor ... Ok 2021-04-05 12:36:57 Generating hashes .... Ok 2021-04-05 12:37:00 Request stop ..... Ok 2021-04-05 12:37:02 DoneSet the path of a license file.

<Unravel installation directory>/unravel/manager config license set

<license filename>This command takes a filename as input and performs the following actions:

Reads the license file path and the license file

The license YAML file contains product licensing information, license validity and expiration date, and the licensed number of clusters and nodes.

Verifies whether it is a valid license

Adds the com.unraveldata.license.file property to the

unravel.propertiesfile. For information, see License property.

Note

If you do not provide the license filename, the

manager config license setcommand prompts for the license information. You can copy the content of the license file.Run autoconfig and apply changes.

<unravel_installation_directory>/manager config auto <unravel_installation_directory>/manager config apply

When prompted, you can provide the following:

Cluster manager URL: Provide the URL of the cluster manager. For example: http://abcd79.unraveldata.com:3000, https://xyz.unraveldata.com:7100

Username

Password

Optional: Set up Kerberos authentication and secure access to Unravel UI.

Kerberos authentication

If you use Kerberos authentication, set the principal path and keytab, enable Kerberos authentication, and apply the changes.

<Unravel installation directory>/unravel/manager config kerberos set --keytab

</path/to/keytab file>--principal<server@example.com><Unravel installation directory>/unravel/manager config kerberos enable <unravel_installation_directory>/manager config applyTruststore certificates

If you are using Truststore certificates, run the following steps from the manager tool to add certificates to the Truststore.

Download the certificates to a directory.

Provide permissions to the user, who installs unravel, to access the certificates directory.

chown -R

username:groupname/path/to/certificates/directoryUpload the certificates using any of the following options:

## Option 1 <unravel_installation_directory>/unravel/manager config tls trust add

</path/to/the/certificate/filesor ## Option 2 <unravel_installation_directory>/unravel/manager config tls trust add --pem</path/to/the/certificate/files><unravel_installation_directory>/unravel/manager config tls trust add --jks</path/to/the/certificate/files><unravel_installation_directory>/unravel/manager config tls trust add --pkcs12</path/to/the/certificate/files>Enable the Truststore.

<unravel_installation_directory>/unravel/manager config tls trust

<enable|disable><unravel_installation_directory>/unravel/manager config applyVerify the connection.

<unravel_installation_directory>/unravel/manager verify connect <Cluster Manager-host> <Cluster Manager-port>

For example: /opt/unravel/manager verify connect xyz.unraveldata.com 7180 -- Running: verify connect xyz.unraveldata.com 7180 - Resolved IP: 111.17.4.123 - Reverse lookup: ('xyz.unraveldata.com', [], ['111.17.4.123']) - Connection: OK - TLS: No -- OK

Start all the services.

<unravel_installation_directory>/unravel/manager start

Check the status of services.

<unravel_installation_directory>/unravel/manager report

The following service statuses are reported:

OK: Service is up and running

Not Monitored: Service is not running. (Has stopped or has failed to start)

Initializing: Services are starting up.

Does not exist: The process unexpectedly disappeared. Restarts will be attempted 10 times.

You can also get the status and information for a specific service or for all services. Run the manager report command as follows:

<unravel_installation_directory>/unravel/manager report <service> ## For example:/opt/unravel/manager report healthcheck

Optionally, you can run healthcheck to verify that all the configurations and services are running successfully.

<unravel_installation_directory>/unravel/manager healthcheck

Healthcheck is run automatically, on an hourly basis, in the backend. You can set your email to receive the healthcheck reports.

3. Configure the core node with edge node settings

Log in to the core node as an Unravel user.

Obtain the Cluster Access ID, which must be provided when you add the edge nodes. Run the following command on the edge node:

<unravel_installation_directory>/unravel/manager support show cluster_access_id

Run the following command to get the

<EDGE_KEY>.<unravel_installation_directory>/unravel/manager config edge show

-- Running: config edge show ------------ | ---------------------------------------- | ------------ EDGE KEY | - edge-a | Enabled | Cluster manager: | Enabled | Clusters: | ------------ | ---------------------------------------- | ------------ -- OKAdd each edge node involved with Unravel monitoring to the core node.

Important

EDGE_KEY is any label that you provide to identify the edge node. Preferably provide the hostname of the edge node for quick identification later.

<unravel_installation_directory>/unravel/manager config edge add

<EDGE_KEY><CLUSTER_ACCESS_ID>Example:

/opt/unravel/manager config edge add edge-a 123-123-123-123Notice

When you are adding edge nodes for CDH or CDP platforms, and If you want to configure Migration or Forecasting reports for these clusters, then you must have one of the following roles:

Full Administrator

Cluster Administrator

Operator

Configurator

These roles are required only in the case of Cloudera manager.

Run auto-configuration.

<unravel_installation_directory>/unravel/manager config edge auto

<EDGE_KEY>For example:

/opt/unravel/manager config edge auto edge-aWhen prompted, you can provide the following:

Cluster manager URL: Provide the URL of the cluster manager. For example: https://abcd773.unraveldata.com:8443

Username

Password

If multiple clusters are handled by Cloudera Manager or Ambari, you are prompted to enable the cluster that you want to monitor. Run the following command to enable the cluster.

<unravel_installation_directory>/unravel/manager config edge cluster enableTip

When prompted, you can provide the following keys:

<EDGE-KEY>: The edge node that you have provided in Step 3.<CLUSTER_KEY>: Name of the cluster that you want to enable for Unravel monitoring. You can retrieve it from the output shown for the manager config auto command.<SERVICE_KEY>: Name of the service.

The Hive metastore database password can be recovered automatically only for a cluster manager with an administrative account. Otherwise, it must be set manually as follows:

Run the manager config edge show command to get the

<EDGE_KEY>,<HIVE_KEY>, and<CLUSTER_KEY>, which must be provided when you set the Hive metastore password.<EDGE_KEY>is the label you provide to identify the edge node when you add the edge node in Step 3.CLUSTER_KEYis the name of the cluster where you set the Hive configurations.<HIVE_KEY>is the definition of the Hive service. In the output of the manager config edge show command, this is shown as the <SERVICE_KEY> for Hive.

-- Running: config edge show ------------ | ---------------------------------------- | ------------ EDGE KEY | - edge-a | Enabled | Cluster manager: | Enabled | Clusters: | CLUSTER KEY | - Cluster_Name | Enabled | HBASE: | SERVICE KEY | - hbase | Enabled | HDFS: | SERVICE KEY | - hdfs | Enabled | HIVE: | SERVICE KEY | - hive | Enabled SERVICE KEY | - hive2 | Enabled | IMPALA: | SERVICE KEY | - impala | Enabled SERVICE KEY | - impala2 | Enabled | KAFKA: | SERVICE KEY | - kafka | Enabled SERVICE KEY | - kafka2 | Enabled | SPARK_ON_YARN: | SERVICE KEY | - spark_on_yarn | Enabled | YARN: | SERVICE KEY | - yarn | Enabled | ZOOKEEPER: | SERVICE KEY | - zookeeper | Enabled ------------ | ---------------------------------------- | ------------ -- OKIn a multi-cluster deployment, where edge nodes are monitoring, set the password on the core node as follows:

<Unravel installation directory>/unravel/manager config edge hive metastore password<EDGE_KEY><CLUSTER-KEY><HIVE-KEY><password>Example:

/opt/unravel/manager config edge hive metastore password local-node cluster1 hive passwordIn case, the core node is monitoring the Hadoop cluster directly, run the following command from the core node.

<Unravel installation directory>/unravel/manager config hive metastore password<CLUSTER_KEY><HIVE_KEY><password>Example:

/opt/unravel/manager config hive metastore password cluster1 hive P@SSw0rdIf you do not provide a password on the command line, the manager prompts for it. In this case, your password is not displayed on the screen.

Configure the FSImage. Refer to configuring FSImage .

Apply changes.

<unravel_installation_directory>/manager config apply

You may be prompted to stop Unravel. Run manager stop to stop Unravel.

Start all the services.

<unravel_installation_directory>/unravel/manager start

Check the status of services.

<unravel_installation_directory>/unravel/manager report

Check the list of services, which are enabled for the edge node after running the auto-configurations.

<unravel_installation_directory>/unravel/manager config edge show

-- Running: config edge show ------------ | ---------------------------------------- | ------------ EDGE KEY | - edge-a | Enabled | Cluster manager: | Enabled | Clusters: | CLUSTER KEY | - Cluster_Name | Enabled | HBASE: | SERVICE KEY | - hbase | Enabled | HDFS: | SERVICE KEY | - hdfs | Enabled | HIVE: | SERVICE KEY | - hive | Enabled SERVICE KEY | - hive2 | Enabled | IMPALA: | SERVICE KEY | - impala | Enabled SERVICE KEY | - impala2 | Enabled | KAFKA: | SERVICE KEY | - kafka | Enabled SERVICE KEY | - kafka2 | Enabled | SPARK_ON_YARN: | SERVICE KEY | - spark_on_yarn | Enabled | YARN: | SERVICE KEY | - yarn | Enabled | ZOOKEEPER: | SERVICE KEY | - zookeeper | Enabled ------------ | ---------------------------------------- | ------------ -- OKYou can disable any of the services. For example, you want to disable the HBase services:

<unravel_installation_directory>/unravel/manager config edge hbase disable

<EDGE_KEY><CLUSTER KEY><SERVICE NAME>Example:

/opt/unravel/manager config edge hbase disable edge-a Cluster_Name hbaseRun healthcheck to verify that all the configurations and services are running successfully.

<unravel_installation_directory>/manager healthcheck

Healthcheck is run automatically, on an hourly basis, in the backend. You can set your email to receive the healthcheck reports.

4. Install Unravel with Interactive Precheck

This section provides information about enabling additional instrumentation for the following platforms:

This topic explains how to enable additional instrumentation on your gateway/edge/client nodes that are used to submit jobs to your big data platform. Additional instrumentation can include:

Sensor jars packaged in a parcel on Unravel server.

Hive queries in Hadoop that are pushed to Unravel Server by the Hive Hook sensor, a JAR file.

Spark job performance metrics that are pushed to Unravel Server by the Spark sensor, a JAR file.

Copying Hive hook and Btrace jars to HDFS shared library path.

Impala queries that are pulled from the Cloudera Manager or from the Impala daemon

Sensor JARs are packaged in a parcel on Unravel server. Run the following steps from the Cloudera Manager to download, distribute, and activate this parcel.

Note

Ensure that Unravel is up and running before you perform the following steps.

In Cloudera Manager, click

. The Parcel page is displayed.

. The Parcel page is displayed.On the Parcel page, click Configuration or Parcel Repositories & Network settings. The Parcel Configurations dialog box is displayed.

Go to the Remote Parcel Repository URLs section, click + and enter the Unravel host along with the exact directory name for your CDH version.

http://

<unravel-host>:<port>/parcels/<cdh <major.minor version>/ // For example: http://xyznode46.unraveldata.com:3000/parcels/cdh6.3/<unravel-host>is the hostname or LAN IP address of Unravel. In a multi-cluster scenario, this would be the host where thelog_receiverdaemon is running.<port>is the Unravel UI port. The default is 3000. In case you have customized the default port, you can add that port number.<cdh-version>is your version of CDH. For example,cdh5.16orcdh6.3.You can go to

http://directory (For example: http://xyznode46.unraveldata.com:3000/parcels) and copy the exact directory name of your CDH version (CDH<major.minor>).<unravel-host>:<port>/parcels/

Note

If you're using Active Directory Kerberos,

unravel-hostmust be a fully qualified domain name or IP address.Tip

If you are running more than one version of CDH (for example, you have multiple clusters), you can add more than one parcel entry for

unravel-host.Click Save Changes.

In the Cloudera Manager, click Check for new parcels, find the

UNRAVEL_SENSORparcel that you want to distribute, and click the corresponding Download button.In the Cloudera Manager, from Location > Parcel Name, find the

UNRAVEL_SENSORparcel that you want to distribute and click the corresponding Download button.After the parcel is downloaded, click the corresponding Distribute button. This will distribute the parcel to all the hosts.

After the parcel is distributed, click the corresponding Activate button. The status column will now display Distributed, Activated.

Note

If you have an old sensor parcel from Unravel, you must deactivate it now.

In Cloudera Manager, select the target cluster from the drop-down, click Hive >Configuration, and search for

hive-env.In Gateway Client Environment Advanced Configuration Snippet (Safety Valve) for

hive-env.sh, click View as text and enter the following exactly as shown, with no substitutions:AUX_CLASSPATH=${AUX_CLASSPATH}:/opt/cloudera/parcels/UNRAVEL_SENSOR/lib/java/unravel_hive_hook.jarIf Sentry is enabled, grant privileges on the JAR files to the Sentry roles that run Hive queries.

Sentry commands may also be needed to enable access to the Hive Hook JAR file. Grant privileges on the JAR files to the roles that run hive queries. Log in to Beeline as user

hiveand use the HiveSQL GRANTstatement to do so.For example (substitute

roleas appropriate),GRANT ALL ON URI 'file:///opt/cloudera/parcels/UNRAVEL_SENSOR/lib/java/unravel_hive_hook.jar' TO ROLE

<role>

In Cloudera Manager, select the target cluster from the drop-down, click Oozie >Configuration and check the path shown in ShareLib Root Directory.

From a terminal application on the Unravel node (edge node in case of multi-cluster.), pick up the ShareLib Root Directory directory path with the latest timestamp.

hdfs dfs -ls

<path to ShareLib directory>// For example: hdfs dfs -ls /user/oozie/share/lib/Important

The jars must be copied to the

libdirectory (with the latest timestamp), which is shown inShareLib Root Directory.Copy the Hive Hook JAR

/opt/cloudera/parcels/UNRAVEL_SENSOR/lib/java/unravel_hive_hook.jarand the Btrace JAR,/opt/cloudera/parcels/UNRAVEL_SENSOR/lib/java/btrace-agent.jarto the specified path in ShareLib Root Directory.For example, if the path specified in ShareLib Root Directory. is

/user/oozie, run the following commands to copy the JAR files.hdfs dfs -copyFromLocal /opt/cloudera/parcels/UNRAVEL_SENSOR/lib/java/unravel_hive_hook.jar /user/oozie/share/lib/

<latest timestamp lib directory>/ //For example: hdfs dfs -copyFromLocal /opt/cloudera/parcels/UNRAVEL_SENSOR/lib/java/unravel_hive_hook.jar /user/oozie/share/lib/lib_20210326035616/hdfs dfs -copyFromLocal /opt/cloudera/parcels/UNRAVEL_SENSOR/lib/java/btrace-agent.jar /user/oozie/share/lib/

<latest timestamp lib directory>/ //For example: hdfs dfs -copyFromLocal /opt/cloudera/parcels/UNRAVEL_SENSOR/lib/java/btrace-agent.jar /user/oozie/share/lib/lib_20210326035616/From a terminal application, copy the Hive Hook JAR

/opt/cloudera/parcels/UNRAVEL_SENSOR/lib/java/unravel_hive_hook.jarand the Btrace JAR,/opt/cloudera/parcels/UNRAVEL_SENSOR/lib/java/btrace-agent.jarto the specified path in ShareLib Root Directory.Caution

Jobs controlled by Oozie 2.3+ fail if you do not copy the Hive Hook and BTrace JARs to the HDFS shared library path.

On the Cloudera Manager, click Hive service and then click the Configuration tab.

Search for

hive-site.xml, which will lead to the Hive Client Advanced Configuration Snippet (Safety Valve) for hive-site.xml section.Specify the hive hook configurations. You have the option to either use the XML text field or Editor to specify the hive hook configuration.

Option 1: XML text field

Click View as XML to open the XML text field and copy-paste the following.

<property> <name>com.unraveldata.host</name> <value>

<UNRAVEL HOST NAME></value> <description>Unravel hive-hook processing host</description> </property> <property> <name>com.unraveldata.hive.hook.tcp</name> <value>true</value> </property> <property> <name>com.unraveldata.hive.hdfs.dir</name> <value>/user/unravel/HOOK_RESULT_DIR</value> <description>destination for hive-hook, Unravel log processing</description> </property> <property> <name>hive.exec.driver.run.hooks</name> <value>com.unraveldata.dataflow.hive.hook.UnravelHiveHook</value> <description>for Unravel, from unraveldata.com</description> </property> <property> <name>hive.exec.pre.hooks</name> <value>com.unraveldata.dataflow.hive.hook.UnravelHiveHook</value> <description>for Unravel, from unraveldata.com</description> </property> <property> <name>hive.exec.post.hooks</name> <value>com.unraveldata.dataflow.hive.hook.UnravelHiveHook</value> <description>for Unravel, from unraveldata.com</description> </property> <property> <name>hive.exec.failure.hooks</name> <value>com.unraveldata.dataflow.hive.hook.UnravelHiveHook</value> <description>for Unravel, from unraveldata.com</description> </property>Ensure to replace

UNRAVEL HOST NAMEwith the Unravel hostname. Replace TheUnravel Host Namewith the hostname of the edge node in case of a multi-cluster deployment.Option 2: Editor:

Click + and enter the property, value, and description (optional).

Property

Value

Description

com.unraveldata.host

Replace with Unravel hostname or with the hostname of the edge node in case of a multi-cluster deployment.

Unravel hive-hook processing host

com.unraveldata.hive.hook.tcp

true

Hive hook tcp protocol.

com.unraveldata.hive.hdfs.dir

/user/unravel/HOOK_RESULT_DIR

Destination directory for hive-hook, Unravel log processing.

hive.exec.driver.run.hooks

com.unraveldata.dataflow.hive.hook.UnravelHiveHook

Hive hook

hive.exec.pre.hooks

com.unraveldata.dataflow.hive.hook.UnravelHiveHook

Hive hook

hive.exec.post.hooks

com.unraveldata.dataflow.hive.hook.UnravelHiveHook

Hive hook

hive.exec.failure.hooks

com.unraveldata.dataflow.hive.hook.UnravelHiveHook

Hive hook

Note

If you configure CDH with Cloudera Navigator's safety valve setting, you must edit the following keys and append the value com.unraveldata.dataflow.hive.hook.UnravelHiveHook without any space.

hive.exec.post.hooks

hive.exec.pre.hooks

hive.exec.failure.hooks

For example:

<property> <name>hive.exec.post.hooks</name> <value>com.unraveldata.dataflow.hive.hook.UnravelHiveHook,com.cloudera.navigator.audit.hive.HiveExecHookContext,org.apache.hadoop.hive.ql.hooks.LineageLogger</value> <description>for Unravel, from unraveldata.com</description> </property>

Similarly, ensure to add the same hive hook configurations in HiveServer2 Advanced Configuration Snippet for hive-site.xml.

Optionally, add a comment in Reason for change and then click Save Changes.

From the Cloudera Manager page, click the Stale configurations icon (

) to deploy the configuration and restart the Hive services.

) to deploy the configuration and restart the Hive services.Check Unravel UI to see if all Hive queries are running.

If queries are running fine and appearing in Unravel UI, then you have successfully added the hive hooks configurations.

If queries are failing with a

class not founderror or permission problems:Undo the

hive-site.xmlchanges in Cloudera Manager.Deploy the hive client configuration.

Restart the Hive service.

Follow the steps in Troubleshooting.

In Cloudera Manager, select the target cluster and then click Spark.

Select Configuration.

Search for

spark-defaults.In Spark Client Advanced Configuration Snippet (Safety Valve) for spark-conf/spark-defaults.conf, enter the following text, replacing placeholders with your particular values:

spark.unravel.server.hostport=

unravel-host:<port>spark.driver.extraJavaOptions=-javaagent:/opt/cloudera/parcels/UNRAVEL_SENSOR/lib/java/btrace-agent.jar=config=driver,libs=spark-versionspark.executor.extraJavaOptions=-javaagent:/opt/cloudera/parcels/UNRAVEL_SENSOR/lib/java/btrace-agent.jar=config=executor,libs=spark-versionspark.eventLog.enabled=true<unravel-host>: Specify the Unravel hostname. In the case of multi-cluster deployment use the FQDN or logical hostname of the edge node forunravel-host.<Port>: 4043 is the default port. If you have customized the ports, you can specify that port number here.<spark-version>: Forspark-version, use a Spark version that is compatible with this version of Unravel. You can check the Spark version with the spark-submit --version command and specify the same version.

Click Save changes.

Click (

) or use the Actions pull-down menu to deploy the client configuration. Your spark-shell will ensure new JVM containers are created with the necessary extraJavaOptions for the Spark drivers and executors.

) or use the Actions pull-down menu to deploy the client configuration. Your spark-shell will ensure new JVM containers are created with the necessary extraJavaOptions for the Spark drivers and executors.Check Unravel UI to see if all Spark jobs are running.

If jobs are running and appearing in Unravel UI, you have deployed the Spark jar successfully.

If queries are failing with a

class not founderror or permission problems:Undo the

spark-defaults.confchanges in Cloudera Manager.Deploy the client configuration.

Investigate and fix the issue.

Follow the steps in Troubleshooting.

Note

If you have YARN-client mode applications, the default Spark configuration is not sufficient, because the driver JVM starts before the configuration set through the SparkConf is applied. For more information, see Apache Spark Configuration. In this case, configure the Unravel Sensor for Spark to profile specific Spark applications only (in other words, per-application profiling rather than cluster-wide profiling).

Impala properties are automatically configured. Refer to Impala properties for the list of properties that are automatically configured. If it is not set already by auto-configuration, set the properties as follows:

<Unravel installation directory>/manager config properties set <PROPERTY> <VALUE>

For example,

<Unravel installation directory>/manager config properties set com.unraveldata.data.source cm <Unravel installation directory>/manager config properties set com.unraveldata.cloudera.manager.url http://my-cm-url<Unravel installation directory>/manager config properties set com.unraveldata.cloudera.manager.usernamemycmname<Unravel installation directory>/manager config properties set com.unraveldata.cloudera.manager.passwordmycmpassword

For multi-cluster, use the following format and set these on the edge node:

<Unravel installation directory>/manager config properties set com.unraveldata.data.source cm <Unravel installation directory>/manager config properties set com.unraveldata.cloudera.manager.url http://my-cm-url<Unravel installation directory>/manager config properties set com.unraveldata.cloudera.manager.usernamemycmname<Unravel installation directory>/manager config properties set com.unraveldata.cloudera.manager.passwordmycmpassword

Note

By default, the Impala sensor task is enabled. To disable it, you can edit the following property as follows:

<Unravel installation directory>/manager config properties set com.unraveldata.sensor.tasks.disabled iw

Optionally, you can change the Impala lookback window. By default, when Unravel Server starts, it retrieves the last 5 minutes of Impala queries. To change this, do the following:

Change the value for com.unraveldata.cloudera.manager.impala.look.back.minutes property.

<Unravel installation directory>/manager config properties set com.unraveldata.cloudera.manager.impala.look.back.minutes -<period>

For example: <Unravel installation directory>/manager config properties set com.unraveldata.cloudera.manager.impala.look.back.minutes -7Note

Include a minus sign in front of the new value.

For more information on creating permanent functions, see Cloudera documentation.

This topic explains how to enable additional instrumentation on your gateway/edge/client nodes that are used to submit jobs to your big data platform. Additional instrumentation can include:

Hive queries in Hadoop that are pushed to Unravel Server by the Hive Hook sensor, a JAR file.

Spark job performance metrics that are pushed to Unravel Server by the Spark sensor, a JAR file.

Impala queries that are pulled from Cloudera Manager or the Impala daemon.

Sensor JARs packaged in a parcel on Unravel Server.

Tez Dag information is pushed to Unravel server by the Tez sensor, a JAR file.

Sensor JARs are packaged in a parcel on Unravel server. Run the following steps from the Cloudera Manager to download, distribute, and activate this parcel.

Note

Ensure that Unravel is up and running before you perform the following steps.

In Cloudera Manager, click

. The Parcel page is displayed.

. The Parcel page is displayed.On the Parcel page, click Configuration or Parcel Repositories & Network settings. The Parcel Configurations dialog box is displayed.

Go to the Remote Parcel Repository URLs section, click + and enter the Unravel host along with the exact directory name for your CDH version.

http://

<unravel-host>:<port>/parcels/<cdh <major:minor version>/For example: http://xyz.unraveldata.com:3000/parcels/cdh 7.1

<unravel-host>is the hostname or LAN IP address of Unravel. In a multi-cluster scenario, this would be the host where thelog_receiverdaemon is running.<port>is the Unravel UI port. The default is 3000. In case you have customized the default port, you can add that port number.<cdh-version>is your version of CDP. For example,cdh7.1.You can go to

http://directory (For example: http://xyznode46.unraveldata.com:3000/parcels) and copy the exact directory name of your CDH version (CDH<major.minor>).<unravel-host>:<port>/parcels/

Note

If you're using Active Directory Kerberos,

unravel-hostmust be a fully qualified domain name or IP address.Tip

If you're running more than one version of CDP (for example, you have multiple clusters), you can add more than one parcel entry for

unravel-host.Click Save Changes.

In the Cloudera Manager, click Check for new parcels find the

UNRAVEL_SENSORparcel that you want to distribute, and click the corresponding Download button.After the parcel is downloaded, click the corresponding Distribute button. This will distribute the parcel to all the hosts.

After the parcel is distributed, click the corresponding Activate button. The status column will now display Distributed, Activated.

Note

If you have an old sensor parcel from Unravel, you must deactivate it now.

In Cloudera Manager, select the target cluster from the drop-down, click Hive on Tez >Configuration, and search for

Service Environment.In Hive on Tez Service Environment Advanced Configuration Snippet (Safety Valve) enter the following exactly as shown, with no substitutions:

AUX_CLASSPATH=${AUX_CLASSPATH}:/opt/cloudera/parcels/UNRAVEL_SENSOR/lib/java/unravel_hive_hook.jarEnsure that the Unravel hive hook JAR has the read/execute access for the user running the hive server.

In Cloudera Manager, select the target cluster from the drop-down, click Oozie >Configuration and check the path shown in ShareLib Root Directory.

From a terminal application on the Unravel node (edge node in case of multi-cluster.), pick up the ShareLib Root Directory directory path with the latest timestamp.

hdfs dfs -ls

<path to ShareLib directory>// For example: hdfs dfs -ls /user/oozie/share/lib/Important

The jars must be copied to the

libdirectory (with the latest timestamp), which is shown inShareLib Root Directory.Copy the Hive Hook JAR

/opt/cloudera/parcels/UNRAVEL_SENSOR/lib/java/unravel_hive_hook.jarand the Btrace JAR,/opt/cloudera/parcels/UNRAVEL_SENSOR/lib/java/btrace-agent.jarto the specified path in ShareLib Root Directory.For example, if the path specified in ShareLib Root Directory. is

/user/oozie, run the following commands to copy the JAR files.hdfs dfs -copyFromLocal /opt/cloudera/parcels/UNRAVEL_SENSOR/lib/java/unravel_hive_hook.jar /user/oozie/share/lib/

<latest timestamp lib directory>/ //For example: hdfs dfs -copyFromLocal /opt/cloudera/parcels/UNRAVEL_SENSOR/lib/java/unravel_hive_hook.jar /user/oozie/share/lib/lib_20210326035616/hdfs dfs -copyFromLocal /opt/cloudera/parcels/UNRAVEL_SENSOR/lib/java/btrace-agent.jar /user/oozie/share/lib/

<latest timestamp lib directory>/ //For example: hdfs dfs -copyFromLocal /opt/cloudera/parcels/UNRAVEL_SENSOR/lib/java/btrace-agent.jar /user/oozie/share/lib/lib_20210326035616/From a terminal application, copy the Hive Hook JAR

/opt/cloudera/parcels/UNRAVEL_SENSOR/lib/java/unravel_hive_hook.jarand the Btrace JAR,/opt/cloudera/parcels/UNRAVEL_SENSOR/lib/java/btrace-agent.jarto the specified path in ShareLib Root Directory.Caution

Jobs controlled by Oozie 2.3+ fail if you do not copy the Hive Hook and BTrace JARs to the HDFS shared library path.

On the Cloudera Manager, go to Tez > configuration and search the following properties:

tez.am.launch.cmd-opts

tez.task.launch.cmd-opts

Append the following to tez.am.launch.cmd-opts and tez.task.launch.cmd-opts properties:

-javaagent:/opt/cloudera/parcels/UNRAVEL_SENSOR/lib/java/btrace-agent.jar=libs=mr,config=tez -Dunravel.server.hostport=

<unravel_host>:4043Note

For

unravel-host, specify the FQDN or the logical hostname of Unravel or of the edge node in the case of multi-cluster.Click the Stale configurations icon (

) to deploy the client configuration and restart the Tez services.

) to deploy the client configuration and restart the Tez services.

On the Cloudera Manager, click Hive on Tez > Configuration tab.

Search for

hive-site.xml, which will lead to the Hive Client Advanced Configuration Snippet (Safety Valve) for hive-site.xml section.Specify the hive hook configurations. You can either use the XML text field or Editor to specify the hive hook configuration.

Option 1: XML text field

Click View as XML to open the XML text field and copy-paste the following.

<property> <name>com.unraveldata.host</name> <value>

<UNRAVEL HOST NAME></value> <description>Unravel hive-hook processing host</description> </property> <property> <name>com.unraveldata.hive.hook.tcp</name> <value>true</value> </property> <property> <name>com.unraveldata.hive.hdfs.dir</name> <value>/user/unravel/HOOK_RESULT_DIR</value> <description>destination for hive-hook, Unravel log processing</description> </property> <property> <name>hive.exec.driver.run.hooks</name> <value>com.unraveldata.dataflow.hive.hook.UnravelHiveHook</value> <description>for Unravel, from unraveldata.com</description> </property> <property> <name>hive.exec.pre.hooks</name> <value>com.unraveldata.dataflow.hive.hook.UnravelHiveHook</value> <description>for Unravel, from unraveldata.com</description> </property> <property> <name>hive.exec.post.hooks</name> <value>com.unraveldata.dataflow.hive.hook.UnravelHiveHook</value> <description>for Unravel, from unraveldata.com</description> </property> <property> <name>hive.exec.failure.hooks</name> <value>com.unraveldata.dataflow.hive.hook.UnravelHiveHook</value> <description>for Unravel, from unraveldata.com</description> </property>Ensure to replace

UNRAVEL HOST NAMEwith the Unravel hostname. Replace TheUnravel Host Namewith the hostname of the edge node in case of a multi-cluster deployment.Option 2: Editor:

Click + and enter the property, value, and description (optional).

Property

Value

Description

com.unraveldata.host

Replace with Unravel hostname or with the hostname of the edge node in case of a multi-cluster deployment.

Unravel hive-hook processing host

com.unraveldata.hive.hook.tcp

true

Hive hook tcp protocol.

com.unraveldata.hive.hdfs.dir

/user/unravel/HOOK_RESULT_DIR

Destination directory for hive-hook, Unravel log processing.

hive.exec.driver.run.hooks

com.unraveldata.dataflow.hive.hook.UnravelHiveHook

Hive hook

hive.exec.pre.hooks

com.unraveldata.dataflow.hive.hook.UnravelHiveHook

Hive hook

hive.exec.post.hooks

com.unraveldata.dataflow.hive.hook.UnravelHiveHook

Hive hook

hive.exec.failure.hooks

com.unraveldata.dataflow.hive.hook.UnravelHiveHook

Hive hook

Similarly, ensure to add the same hive hook configurations in HiveServer2 Advanced Configuration Snippet (Safety Valve) for hive-site.xml.

Optionally, add a comment in Reason for change and then click Save Changes.

From the Cloudera Manager page, Click the Stale configurations icon (

) to deploy the configuration and restart the Hive services.

) to deploy the configuration and restart the Hive services.Check Unravel UI to see if all Hive queries are running.

If queries are running fine and appearing in Unravel UI, then you have successfully added the hive hooks configurations.

If queries are failing with a

class not founderror or permission problems:Undo the

hive-site.xmlchanges in Cloudera Manager.Deploy the hive client configuration.

Restart the Hive service.

Follow the steps in Troubleshooting.

In Cloudera Manager, select the target cluster, click Kafka service > Configuration, and search for

broker_java_opts.In Additional Broker Java Options enter the following:

-server -XX:+UseG1GC -XX:MaxGCPauseMillis=20 -XX:InitiatingHeapOccupancyPercent=35 -XX:G1HeapRegionSize=16M -XX:MinMetaspaceFreeRatio=50 -XX:MaxMetaspaceFreeRatio=80 -XX:+DisableExplicitGC -Djava.awt.headless=true -Djava.net.preferIPv4Stack=true -Dcom.sun.management.jmxremote.local.only=true -Djava.rmi.server.useLocalHostname=true -Dcom.sun.management.jmxremote.rmi.port=9393

Click Save Changes.

In Cloudera Manager, select the target cluster and then click Spark.

Select Configuration.

Search for

spark-defaults.In Spark Client Advanced Configuration Snippet (Safety Valve) for spark-conf/spark-defaults.conf, enter the following text, replacing placeholders with your particular values:

spark.unravel.server.hostport=

unravel-host:portspark.driver.extraJavaOptions=-javaagent:/opt/cloudera/parcels/UNRAVEL_SENSOR/lib/java/btrace-agent.jar=config=driver,libs=spark-versionspark.executor.extraJavaOptions=-javaagent:/opt/cloudera/parcels/UNRAVEL_SENSOR/lib/java/btrace-agent.jar=config=executor,libs=spark-versionspark.eventLog.enabled=true<unravel-host>: Specify the Unravel hostname. In the case of multi-cluster deployment use the FQDN or logical hostname of the edge node forunravel-host.<Port>: 4043 is the default port. If you have customized the ports, you can specify that port number here.<spark-version>: Forspark-version, use a Spark version that is compatible with this version of Unravel. You can check the Spark version with the spark-submit --version command and specify the same version.

Click Save changes.

Click the Stale configurations icon (

) to deploy the client configuration and restart the Spark services. Your spark-shell will ensure new JVM containers are created with the necessary extraJavaOptions for the Spark drivers and executors.

) to deploy the client configuration and restart the Spark services. Your spark-shell will ensure new JVM containers are created with the necessary extraJavaOptions for the Spark drivers and executors.Check Unravel UI to see if all Spark jobs are running.

If jobs are running and appearing in Unravel UI, you have deployed the Spark jar successfully.

If queries are failing with a

class not founderror or permission problems:Undo the

spark-defaults.confchanges in Cloudera Manager.Deploy the client configuration.

Investigate and fix the issue.

Follow the steps in Troubleshooting.

Note

If you have YARN-client mode applications, the default Spark configuration is not sufficient, because the driver JVM starts before the configuration set through the SparkConf is applied. For more information, see Apache Spark Configuration. In this case, configure the Unravel Sensor for Spark to profile specific Spark applications only (in other words, per-application profiling rather than cluster-wide profiling).

Impala properties are automatically configured. Refer to Impala properties for the list of properties that are automatically configured. If it is not set already by auto-configuration, set the properties as follows:

<Unravel installation directory>/manager config properties set <PROPERTY> <VALUE>

For example,

<Unravel installation directory>/manager config properties set com.unraveldata.data.source cm <Unravel installation directory>/manager config properties set com.unraveldata.cloudera.manager.url http://my-cm-url<Unravel installation directory>/manager config properties set com.unraveldata.cloudera.manager.usernamemycmname<Unravel installation directory>/manager config properties set com.unraveldata.cloudera.manager.passwordmycmpassword

For multi-cluster, use the following format and set these on the edge node:

<Unravel installation directory>/manager config properties set com.unraveldata.data.source cm <Unravel installation directory>/manager config properties set com.unraveldata.cloudera.manager.url http://my-cm-url<Unravel installation directory>/manager config properties set com.unraveldata.cloudera.manager.usernamemycmname<Unravel installation directory>/manager config properties set com.unraveldata.cloudera.manager.passwordmycmpassword

Note

By default, the Impala sensor task is enabled. To disable it, you can edit the following property as follows:

<Unravel installation directory>/manager config properties set com.unraveldata.sensor.tasks.disabled iw

Optionally, you can change the Impala lookback window. By default, when Unravel Server starts, it retrieves the last 5 minutes of Impala queries. To change this, do the following:

Change the value for com.unraveldata.cloudera.manager.impala.look.back.minutes property.

<Unravel installation directory>/manager config properties set com.unraveldata.cloudera.manager.impala.look.back.minutes -<period>

For example: <Unravel installation directory>/manager config properties set com.unraveldata.cloudera.manager.impala.look.back.minutes -7Note

Include a minus sign in front of the new value.

For more information on creating permanent functions, see Cloudera documentation.

This topic explains how to configure Unravel to retrieve additional data from Hive, Tez, Spark, and Oozie, such as Hive queries, application timelines, Spark jobs, YARN resource management data, and logs. You can do this by generating Unravel's JARs and distributing them to every node that runs queries in the cluster. Later, after the JARs are distributed to the nodes, you can integrate Hive, Tez, and Spark data with Unravel.

Hive configurations

Import the hive hook sensor jar into the classpath

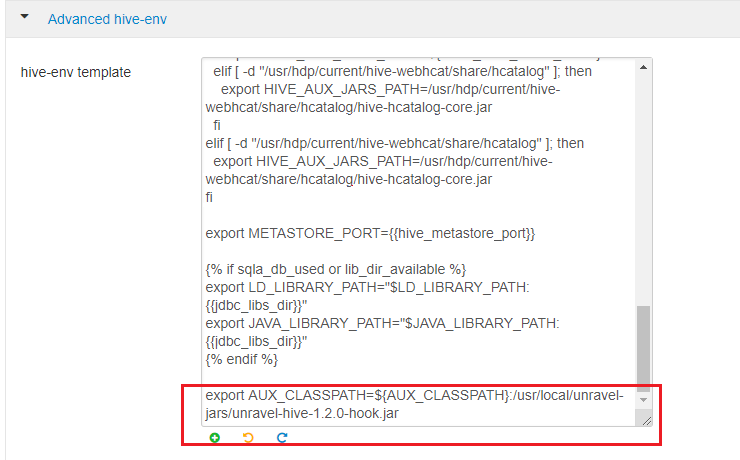

On the Ambari UI, click Hive > Configs > Advanced > Advanced hive-env. In the hive-env template, towards the end of line, add:

export AUX_CLASSPATH=${AUX_CLASSPATH}:<path to unravel hive hook sensor jar>/unravel-hive-<version>-hook.jarFor example:

export AUX_CLASSPATH=${AUX_CLASSPATH}:/usr/local/unravel-jars/unravel-hive-1.2.0-hook.jar

Configure hive hook

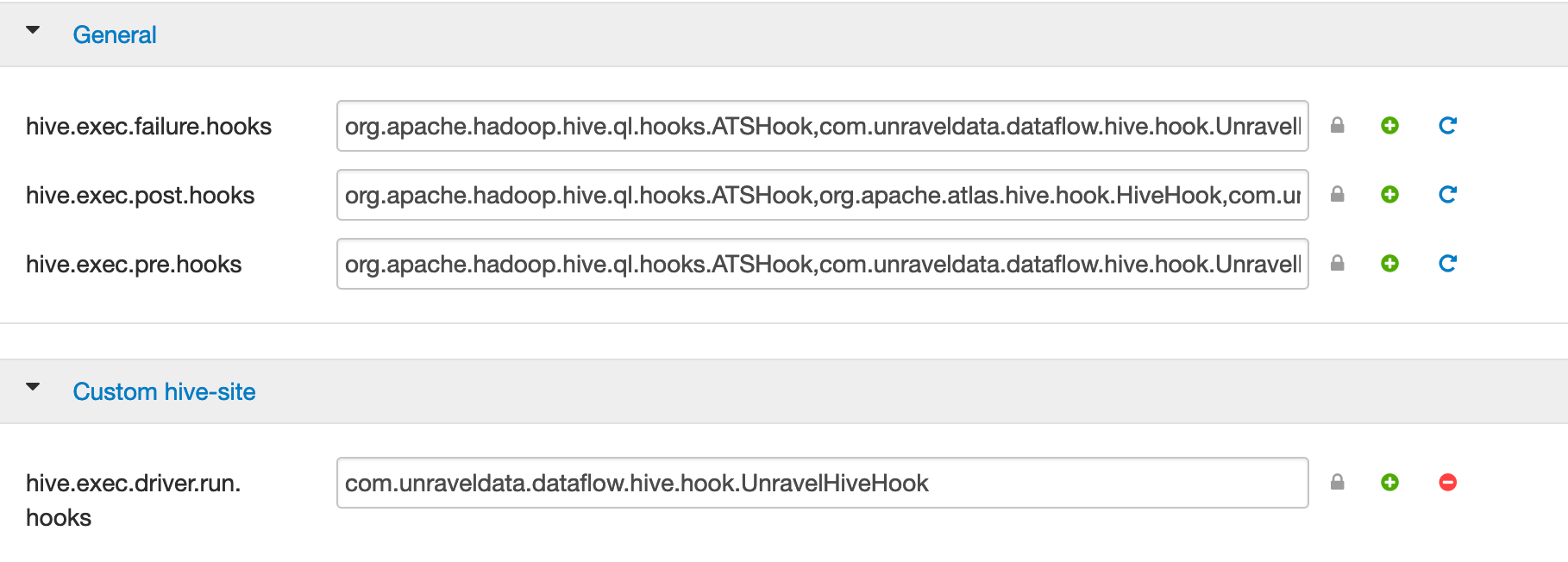

On the Ambari UI, click Hive > Configs > Advanced. In the General section, search for the following hive hooks:

hive.exec.failure.hooks hive.exec.post.hooks hive.exec.pre.hooks hive.exec.run.hooks Copy the

,com.unraveldata.dataflow.hive.hook.UnravelHiveHookproperty against each of the hooks.Important

Be sure to append with no space before or after the comma, for example, property=existingValue,newValue

For example:

hive.exec.failure.hooks=

existing-value,com.unraveldata.dataflow.hive.hook.UnravelHiveHook hive.exec.post.hooks=existing-value,com.unraveldata.dataflow.hive.hook.UnravelHiveHook hive.exec.pre.hooks=existing-value,com.unraveldata.dataflow.hive.hook.UnravelHiveHook hive.exec.run.hooks=existing-value,com.unraveldata.dataflow.hive.hook.UnravelHiveHookIn case you do not find these hive hooks, go to the Custom hive-site section, click Add Property and add these as key and value per line in the Properties text box.

For example:

hive.exec.pre.hooks=com.unraveldata.dataflow.hive.hook.UnravelHiveHook

Similarly, in the Custom hive-site section. ensure to set com.unraveldata.host: to the edge node's hostname.

Configure HDFS

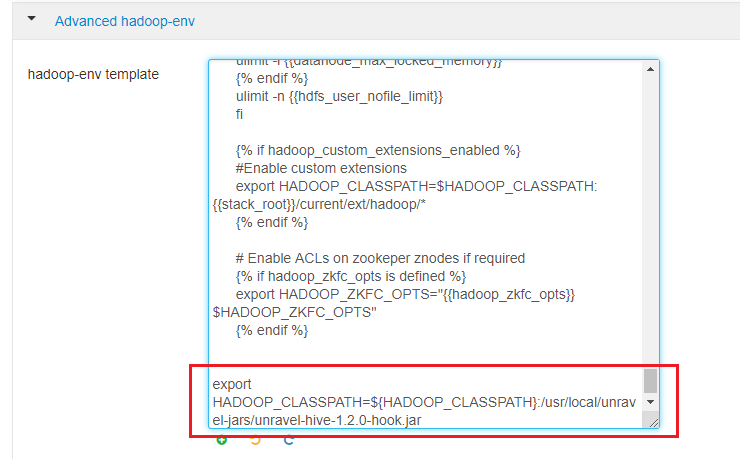

Click HDFS > Configs > Advanced > Advanced hadoop-env. In the hadoop-env template, look for export HADOOP_CLASSPATH and append Unravel's JAR path as shown.

export HADOOP_CLASSPATH=${HADOOP_CLASSPATH}:<Unravel sensor installation directory>/unravel-hive-<version>-hook.jarFor example:

export HADOOP_CLASSPATH=${HADOOP_CLASSPATH}:/opt/unravel-hive-1.2.0-hook.jar

Configure the BTrace agent for Tez

From the Ambari UI, go to Tez > config > Advanced and in the General section, append the Java options below to tez.am.launch.cmd-opts and tez.task.launch.cmd-opts:

-javaagent:

<Unravel installation directory>/unravel-jars/jars/btrace-agent.jar=libs=mr,config=tez -Dunravel.server.hostport=<unravel-host>:4043<unravel-host>should be the hostname of the Unravel edge node. For example: abcd.unraveldata.comTip

In a Kerberos environment, you need to modify tez.am.view-acls property with *.

Configure the Application Timeline Server (ATS)

Note

From Unravel v4.6.1.6, this step is not mandatory.

In

yarn-site.xml:yarn.timeline-service.enabled=true yarn.timeline-service.entity-group-fs-store.group-id-plugin-classes=org.apache.tez.dag.history.logging.ats.TimelineCachePluginImpl yarn.timeline-service.version=1.5 or yarn.timeline-service.versions=1.5f,2.0f

If yarn.acl.enable is

true, addunravelto yarn.admin.acl.In

hive-env.sh, add:Use ATS Logging: true

In

tez-site.xml, add:tez.dag.history.logging.enabled=true tez.am.history.logging.enabled=true tez.history.logging.service.class=org.apache.tez.dag.history.logging.ats.ATSV15HistoryLoggingService tez.am.view-acls=*

If

tez-site.xmlis not available, you can also add these properties from the Ambari UI. Go to Tez > config > Custom tez-site and add the above properties as key and value per line in the Properties text box.Note

From HDP version 3.1.0 onwards, this Tez configuration must be done manually.

Configure Spark-on-Yarn

Tip

For

unravel-host, use Unravel Server's fully qualified domain name (FQDN) or IP address.Add the location of the Spark JARs.

Click Spark > Configs > Custom spark-defaults > Add Property and use

Bulk property add mode, or edit

Bulk property add mode, or edit spark-defaults.confas follows:Tip

If your cluster has only one Spark 1.X version,

spark-defaults.confis in/usr/hdp/current/spark-client/conf.If your cluster is running Spark 2.X,

spark-defaults.confis in/usr/hdp/current/spark2-client/conf.

This example uses default locations for Spark JARs. Your environment may vary.

spark.unravel.server.hostport=

unravel-host:4043 spark.driver.extraJavaOptions=-javaagent:/usr/local/unravel-jars/jars/btrace-agent.jar=config=driver,libs=<spark-version>spark.executor.extraJavaOptions=-javaagent:/usr/local/unravel-jars/jars/btrace-agent.jar=config=executor,libs=<spark-version>spark.eventLog.enabled=trueFor example: