Part 1: Installing Unravel Server on CDH+CM

This topic explains how to deploy Unravel Server on Cloudera Distribution of Hadoop (CDH). Your CDH environment must be running Cloudera Manager (CM).

1. Confirm that your CDH cluster meets Unravel's platform requirements

For details, see Prerequisites.

2. Configure the host

Use Cloudera Manager to allocate a cluster gateway/edge/client host with HDFS access, and create a gateway configuration for the host. The gateway configuration must have client roles for HDFS, YARN, Spark, Hive, and, optionally, Spark2.
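Before you move on, you may want to confirm that the client roles actually landed on the host. The function below is a minimal sketch, not an Unravel or Cloudera tool. It assumes that the client roles put the commands hdfs, yarn, spark-submit, hive, and (for Spark2) spark2-submit on the PATH. Those command names are an assumption, so adjust them to match your parcels.

```shell
# Sketch: report which client commands are on the PATH of the gateway host.
# Assumption: these command names; adjust them to match your CDH install.
check_gateway_clients() {
  local missing=0 cmd
  for cmd in hdfs yarn spark-submit hive; do
    if command -v "$cmd" >/dev/null 2>&1; then
      echo "found $cmd"
    else
      echo "MISSING $cmd"
      missing=1
    fi
  done
  # Spark2 is optional, so its absence is only reported, not treated as an error.
  if ! command -v spark2-submit >/dev/null 2>&1; then
    echo "note: spark2-submit not found (needed only if Spark2 is installed)"
  fi
  return "$missing"
}

# Usage on the gateway host:
#   check_gateway_clients && hdfs dfs -ls /
```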

3. Install MySQL

Complete the [Before Installing Unravel RPM] steps in Install and configure MySQL for Unravel.

4. Install Unravel Server on the host

Download the Unravel Server RPM.

Ensure that the host machine's local disks have the minimum space required.

Unravel Server uses two separate disks: one for binaries (/usr/local/unravel) and one for data (/srv/unravel). Keeping /srv/unravel on its own disk benefits performance. If either disk doesn't have the minimum space required, create a symbolic link from that directory to another disk drive that does.

Tip: To check the space on a volume, use the df command. For example,

df -h /srv
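The symbolic-link workaround can be sketched as follows. This is a minimal illustration, not an Unravel-provided script: relocate_with_symlink is a hypothetical helper, and /data/unravel in the usage comment stands in for whatever larger disk you have. Run it before installing the RPM so that the install writes through the link.

```shell
# Sketch: point a default Unravel directory at a larger disk via a symlink.
# relocate_with_symlink is a hypothetical helper; /data/unravel is a placeholder.
relocate_with_symlink() {
  local link_path=$1    # default location, for example /srv/unravel
  local target_dir=$2   # directory on the disk that has enough space
  # Refuse to clobber a real directory that may already hold data.
  if [ -e "$link_path" ] && [ ! -L "$link_path" ]; then
    echo "refusing: $link_path exists and is not a symlink" >&2
    return 1
  fi
  mkdir -p "$target_dir" || return 1
  mkdir -p "$(dirname "$link_path")" || return 1
  # -n replaces an existing symlink instead of creating a link inside its target.
  ln -sfn "$target_dir" "$link_path"
}

# Usage (as root, before the RPM install):
#   df -h /srv /data
#   relocate_with_symlink /srv/unravel /data/unravel
```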

Install the Unravel Server RPM, where <version> is the version in the name of the RPM file you downloaded:

sudo rpm -ivh unravel-<version>.rpm

The installation creates the following items:

- /usr/local/unravel/, which contains executables, scripts, the properties file (unravel.properties), and logs.
- /srv/unravel/, which contains the internal database and other persistent state. For production, you can replace the internal database with an externally managed MySQL database.
- /etc/init.d/unravel_*, scripts for controlling services, such as unravel_all.sh for manually stopping, starting, and getting the status of all daemons in the proper order.
- User unravel, if it doesn't already exist.
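To confirm the RPM created everything listed above, a quick check such as the following may help. It is a sketch only: verify_unravel_install is a hypothetical helper, and its optional root-prefix argument exists purely so the check can be dry-run against a scratch directory. Leave it empty on the real host.

```shell
# Sketch: check that the items the Unravel RPM creates are present.
# verify_unravel_install is hypothetical; the optional argument is a root prefix
# for dry runs and should be omitted on the real host.
verify_unravel_install() {
  local root=${1:-} status=0 path
  for path in "$root/usr/local/unravel" \
              "$root/usr/local/unravel/etc/unravel.properties" \
              "$root/srv/unravel" \
              "$root/etc/init.d/unravel_all.sh"; do
    if [ -e "$path" ]; then
      echo "ok $path"
    else
      echo "missing $path"
      status=1
    fi
  done
  # The RPM also creates the unravel user if it did not already exist.
  if id unravel >/dev/null 2>&1; then
    echo "ok user unravel"
  else
    echo "missing user unravel"
    status=1
  fi
  return "$status"
}

# Usage on the Unravel host:
#   verify_unravel_install
```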

5. Configure MySQL

Complete the [After Installing Unravel RPM] steps in Install and configure MySQL for Unravel.

6. Configure Unravel Server with basic options

(Optional) Enable additional daemons for high-volume workloads.

In /usr/local/unravel/etc/unravel.properties, set general properties for Unravel Server.

Point Unravel Server to logs on HDFS.

Unravel collects HDFS logs for analysis. To point Unravel Server to these logs, set the following properties in /usr/local/unravel/etc/unravel.properties. For example:

com.unraveldata.job.collector.done.log.base=/user/history/done
com.unraveldata.job.collector.log.aggregation.base=/tmp/logs
com.unraveldata.spark.eventlog.location=hdfs:///user/spark/applicationHistory,hdfs:///user/spark/spark2
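If you prefer to script these edits rather than use a text editor, a helper along the following lines can set each property idempotently. This is a sketch, not part of Unravel: set_unravel_prop is a hypothetical name. It replaces any existing line that starts with the key and an equals sign, or appends the pair if the key is absent, and it leaves other lines untouched.

```shell
# Sketch: set one key in unravel.properties, collapsing any existing "key="
# lines into a single updated line, or appending the pair if the key is absent.
# set_unravel_prop is a hypothetical helper, not a command shipped with Unravel.
set_unravel_prop() {
  local file=$1 key=$2 value=$3 tmp_file
  [ -f "$file" ] || : > "$file"
  tmp_file=$(mktemp) || return 1
  awk -v k="$key" -v v="$value" '
    index($0, k "=") == 1 { if (!done) print k "=" v; done = 1; next }
    { print }
    END { if (!done) print k "=" v }
  ' "$file" > "$tmp_file" || { rm -f "$tmp_file"; return 1; }
  # Copy back with cat so the original file keeps its owner and permissions.
  cat "$tmp_file" > "$file"
  rm -f "$tmp_file"
}

# Usage:
#   PROPS=/usr/local/unravel/etc/unravel.properties
#   set_unravel_prop "$PROPS" com.unraveldata.job.collector.done.log.base /user/history/done
#   set_unravel_prop "$PROPS" com.unraveldata.job.collector.log.aggregation.base /tmp/logs
```

Rewriting through a temporary file keeps a failed awk run from truncating the real properties file.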

To confirm that you have the right path, use the hdfs dfs -ls command. For example,

hdfs dfs -ls /user/history/done
hdfs dfs -ls /tmp/logs
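To check every configured location at once, you can read the three properties back out of unravel.properties and test each path. This is a minimal sketch with hypothetical function names. It uses hdfs dfs -test -d, which exits with 0 when the path is an existing directory. It splits the Spark property on commas and assumes that the paths contain no spaces.

```shell
# Sketch: read the log locations back out of unravel.properties and confirm
# each one is a directory on HDFS. Function names are hypothetical; paths are
# assumed to contain no spaces.
list_unravel_log_paths() {
  # Print each configured path on its own line; the Spark property may hold
  # a comma-separated list.
  sed -n \
    -e 's/^com\.unraveldata\.job\.collector\.done\.log\.base=//p' \
    -e 's/^com\.unraveldata\.job\.collector\.log\.aggregation\.base=//p' \
    -e 's/^com\.unraveldata\.spark\.eventlog\.location=//p' \
    "$1" | tr ',' '\n'
}

check_unravel_log_paths() {
  local status=0 p
  for p in $(list_unravel_log_paths "$1"); do
    if hdfs dfs -test -d "$p"; then
      echo "ok $p"
    else
      echo "missing $p"
      status=1
    fi
  done
  return "$status"
}

# Usage:
#   check_unravel_log_paths /usr/local/unravel/etc/unravel.properties
```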

If Kerberos is enabled, create or identify a principal and keytab for Unravel daemons to use for access to HDFS and the REST API.
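One way to confirm that the keytab works before you hand it to Unravel is to list its entries, obtain a ticket from it, and try an HDFS read. The sketch below uses the standard MIT Kerberos klist -kt and kinit -kt commands. The function name, keytab path, and principal in the usage line are placeholders. Run it as the user that will run the Unravel daemons so that the ticket lands in that user's cache.

```shell
# Sketch: confirm a keytab can authenticate the principal Unravel will use.
# check_unravel_keytab is hypothetical; the keytab path and principal in the
# usage line are placeholders.
check_unravel_keytab() {
  local keytab=$1 principal=$2
  if [ ! -r "$keytab" ]; then
    echo "keytab not readable: $keytab" >&2
    return 1
  fi
  klist -kt "$keytab" || return 1               # list principals stored in the keytab
  kinit -kt "$keytab" "$principal" || return 1  # get a ticket without a password prompt
  hdfs dfs -ls /user/history/done >/dev/null    # HDFS read as that principal
}

# Usage (placeholders):
#   check_unravel_keytab /etc/security/keytabs/unravel.keytab unravel@EXAMPLE.COM
```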

If Sentry is enabled:

Create your own alternate principal with narrow privileges and HDFS access permissions.

Verify that the user running the Unravel daemons (as set in /etc/unravel_ctl) has the permissions shown in the table below.

| Resource | Principal | Permission | Purpose |
|---|---|---|---|
| hdfs:///user/spark/applicationHistory | Your alt principal | read+execute | Spark event log |
| hdfs:///user/spark/spark2ApplicationHistory | Your alt principal | read+execute | Spark2 event log (if Spark2 is installed) |
| hdfs:///user/history | Your alt principal | read+execute | MapReduce logs |
| hdfs:///tmp/logs | Your alt principal | read+execute | YARN aggregation folder |
| hdfs:///user/hive/warehouse | Your alt principal | read+execute | Obtain table partition sizes with "stat" only |
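A quick way to spot a missing grant is to list each resource in the table while authenticated as your alternate principal, for example after running kinit with its keytab. In HDFS, listing a directory requires read+execute on that directory, so a failed listing points to the row to fix. This is a sketch with a hypothetical function name. Skip the spark2ApplicationHistory path if Spark2 isn't installed.

```shell
# Sketch: as the alternate principal, try to list each resource from the table.
# Listing a directory needs read+execute on it, so a failure suggests a missing
# grant. check_unravel_hdfs_access is a hypothetical helper.
check_unravel_hdfs_access() {
  local status=0 p
  for p in /user/spark/applicationHistory /user/spark/spark2ApplicationHistory \
           /user/history /tmp/logs /user/hive/warehouse; do
    if hdfs dfs -ls "$p" >/dev/null 2>&1; then
      echo "ok $p"
    else
      echo "FAILED $p"
      status=1
    fi
  done
  return "$status"
}

# Usage (after kinit as the alternate principal):
#   check_unravel_hdfs_access
```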

7. Change the run-as user and group for Unravel daemons

9. Start Unravel services

Run the following commands to start all Unravel services and give the daemons time to start:

sudo /etc/init.d/unravel_all.sh start
sleep 60
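Instead of a fixed sleep, you could poll until the UI answers or a timeout expires. The sketch below is an alternative to sleep 60, not the documented procedure. wait_until is a hypothetical helper. The curl check against port 3000 assumes the UI address used in step 10, and it assumes that the UI returns a success status once it is up.

```shell
# Sketch: poll until a check command succeeds or roughly TIMEOUT seconds pass.
# wait_until is hypothetical; the curl check in the usage line assumes the UI
# listens on port 3000 and returns a success status once it is up.
wait_until() {
  local timeout=$1 elapsed=0
  shift
  until "$@" >/dev/null 2>&1; do
    if [ "$elapsed" -ge "$timeout" ]; then
      echo "timed out after ${timeout}s waiting for: $*" >&2
      return 1
    fi
    sleep 1
    elapsed=$((elapsed + 1))
  done
}

# Usage:
#   sudo /etc/init.d/unravel_all.sh start
#   wait_until 120 curl -sf -o /dev/null "http://$(hostname -f):3000/"
```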

This completes the basic/core configuration.

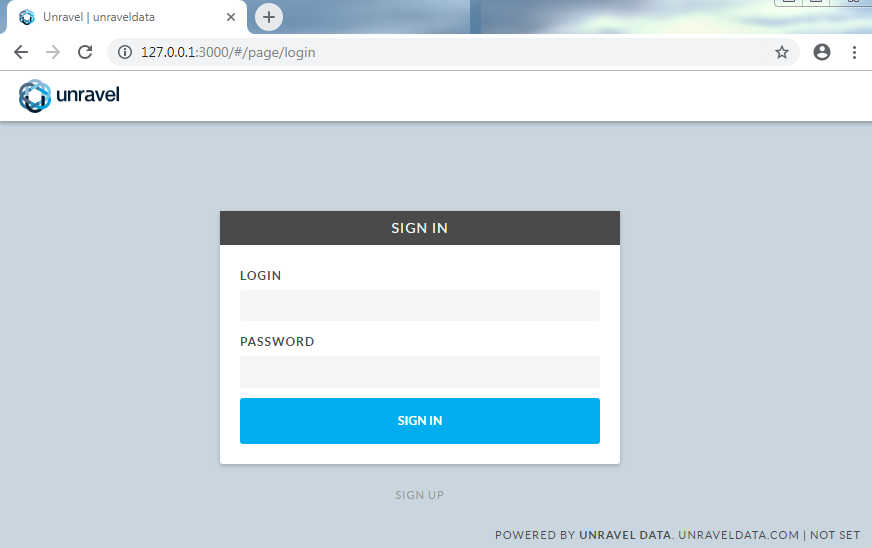

10. Log in to the Unravel UI

Find the hostname of Unravel Server.

echo "http://$(hostname -f):3000/"

If you're using an SSH tunnel or HTTP proxy, you might need to adjust the host name and port in this URL accordingly.
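For the SSH tunnel case, a local port forward is one common setup. In the sketch below, ssh -N -L forwards a local port to port 3000 on the Unravel host, so the browser uses http://localhost:<local port>/ instead of the server's host name. The function name, login, and local port are placeholders.

```shell
# Sketch: forward a local port to the Unravel UI (port 3000) over SSH.
# open_unravel_tunnel is hypothetical; the target and local port are placeholders.
open_unravel_tunnel() {
  local target=$1              # for example you@unravel-host
  local local_port=${2:-3000}  # local port the browser connects to
  echo "Opening tunnel; once connected, browse to http://localhost:${local_port}/ (Ctrl-C to close)"
  # -N: run no remote command; -L: forward local_port to port 3000 on the remote host itself.
  ssh -N -L "${local_port}:localhost:3000" "$target"
}

# Usage:
#   open_unravel_tunnel you@unravel-host 8080
```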

Using a supported web browser, navigate to http://unravel-host:3000 and log in with username admin and password unraveldata.