Part 2: Enabling Additional Instrumentation

This topic explains how to configure Unravel to retrieve additional data from Hive, Tez, Spark and Oozie, such as Hive queries, application timelines, Spark jobs, YARN resource management data, and logs.

1. Generate and Distribute Unravel's Hive Hook and Spark Sensor JARs

In order to enable additional instrumentation, first generate Unravel's JARs and distribute them to every edge in the cluster which runs queries. Later, after JARs are distributed to the nodes, we'll walk you through integrating Hive, Tez, and Spark with Unravel.

On Unravel Server, log in as

rootand confirm that wget is installed.yum install -y wget

Run the following commands:

mkdir /usr/local/unravel-jars chmod 775 -R /usr/local/unravel-jars/ chown root:hadoop /usr/local/unravel-jars/ cd /usr/local/unravel/install_bin/cluster-setup-scripts/ chmod +x /usr/local/unravel/install_bin/cluster-setup-scripts/unravel_hdp_setup.py sudo python2 unravel_hdp_setup.py --sensor-only --unravel-server

unravel-host:3000 --spark-versionspark-version--hive-versionhive-version--ambari-serverambari-hostcp /usr/local/unravel_client/unravel-hive-1.2.0-hook.jar /usr/local/unravel-jars/ cp -pr /usr/local/unravel-agent/jars/* /usr/local/unravel-jars/Tip

For

unravel-host, specify the protocol (http or https) and use the fully qualified domain name (FQDN) or IP address of Unravel Server. For example,https://playground3.unraveldata.com:3000.Files are installed locally in these two directories:

/usr/local/unravel_client(Hive Hook JAR)/usr/local/unravel-agent/jars/(Resource metrics sensor JARs)

Make a note of these directories because you'll need to specify them in Ambari.

Distribute

/usr/local/unravel_clientand/usr/local/unravel-agent/jars/to all worker, edge, and master nodes which run queries.For example,

scp -r /usr/local/unravel_client/ root@

every-cluster-host:/usr/local/unravel-jars scp -r /usr/local/unravel-agent/ root@every-cluster-host:/usr/local/unravel-jarsOn each instrumented node, do the following:

Make sure the node can reach port 4043 of Unravel Server.

Add Unravel's binaries to the

*CLASSPATHenvironment variables.export AUX_CLASSPATH=${AUX_CLASSPATH}:/usr/local/unravel-jars/unravel-hive-1.2.0-hook.jar export HADOOP_CLASSPATH=${HADOOP_CLASSPATH}:/usr/local/unravel-jars/unravel-hive-1.2.0-hook.jar

2. For Oozie, Copy the Hive Hook and BTrace JARs to the HDFS Shared Library Path

Copy the Hive Hook JAR, /usr/local/unravel_client/unravel-hive-1.2.0-hook.jar and the Btrace JAR, /usr/local/unravel-agent/jars/btrace-agent.jar to the HDFS shared library path specified by oozie.libpath. If you don't do this, jobs controlled by Oozie 2.3+ will fail.

3. Add Unravel's Binaries to CLASSPATH in Ambari

Adjust Hive and Hadoop configurations in Ambari to allow these JARs to be picked up by Hive Hook and Hadoop.

Add

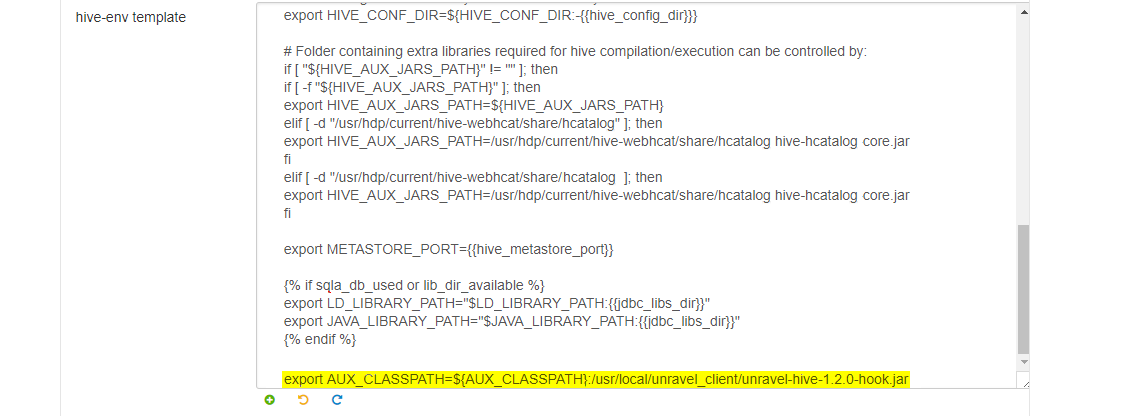

AUX_CLASSPATHto Ambari's hive-env template:In Ambari, click Hive | Configs | Advanced | Advanced hive-env.

Inside the Advanced hive-env template, towards the end of line, add:

export AUX_CLASSPATH=${AUX_CLASSPATH}:/usr/local/unravel-jars/unravel-hive-1.2.0-hook.jar

Add

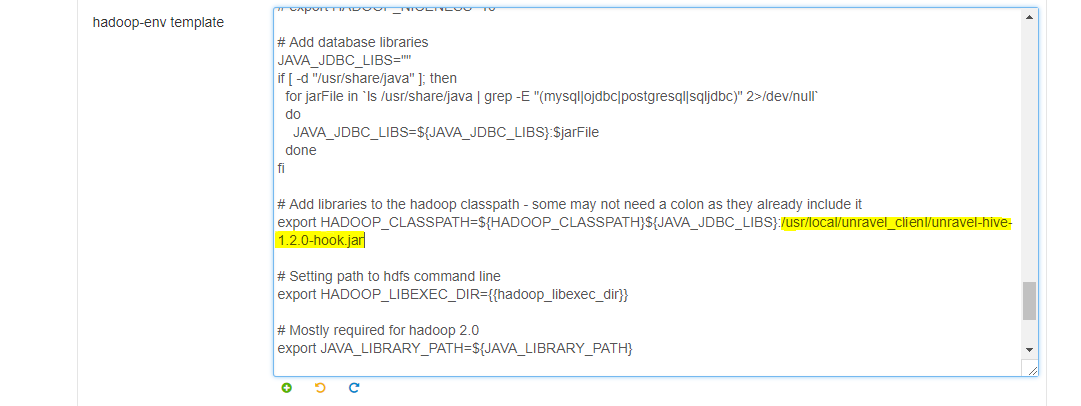

HADOOP_CLASSPATHto Ambari's hadoop-env template:In Ambari, click HDFS | Configs | Advanced | Advanced hadoop-env.

Inside the hadoop-env template, look for export HADOOP_CLASSPATH and append Unravel's JAR path:

export HADOOP_CLASSPATH=${HADOOP_CLASSPATH}:/usr/local/unravel-jars/unravel-hive-1.2.0-hook.jar

4. Connect Unravel Server to Hive

Warning

Completion of this step requires a restart of all affected Hive services in Ambari Web UI.

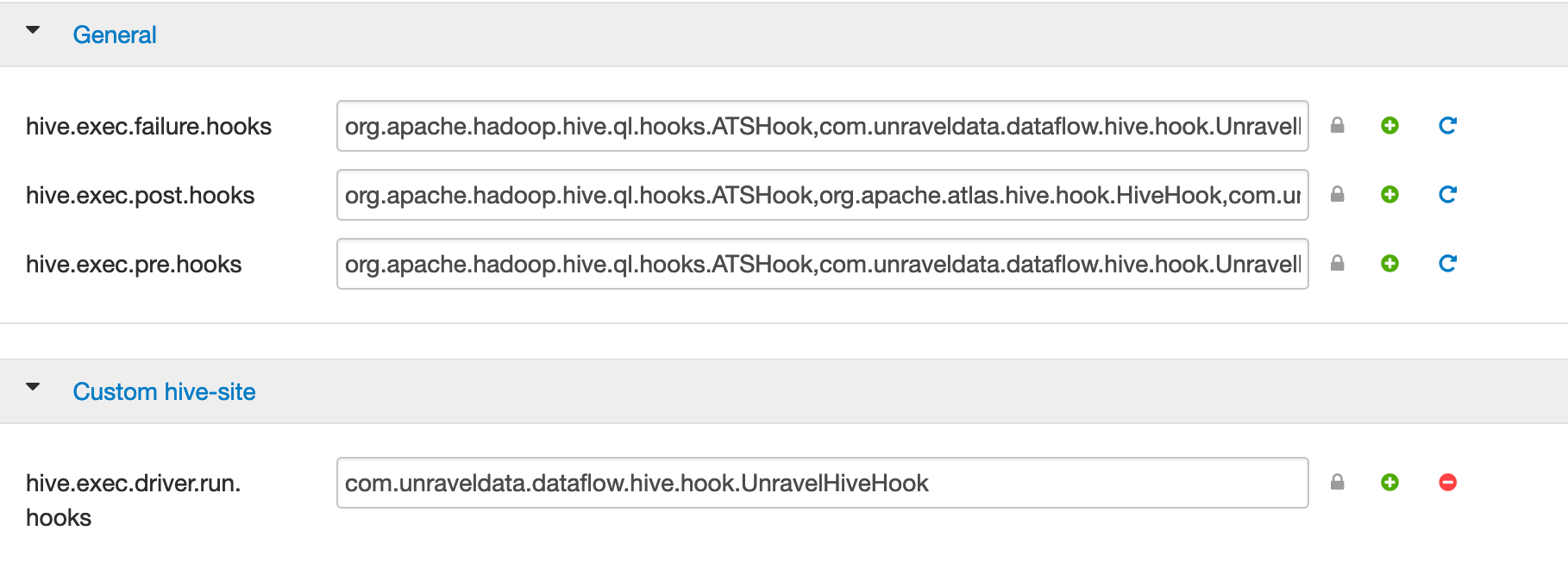

In Ambari's general properties, append a comma with no space, followed by

com.unraveldata.dataflow.hive.hook.UnravelHiveHook, to the following properties:hive.exec.failure.hooks hive.exec.post.hooks hive.exec.pre.hooks For example,

hive.exec.pre.hooks=

existing-value,com.unraveldata.dataflow.hive.hook.UnravelHiveHook hive.exec.post.hooks=existing-value,com.unraveldata.dataflow.hive.hook.UnravelHiveHook hive.exec.failure.hooks=existing-value,com.unraveldata.dataflow.hive.hook.UnravelHiveHook

In Ambari's custom hive-site editor, do the following:

Add a property, hive.exec.driver.run.hooks, and set its value to

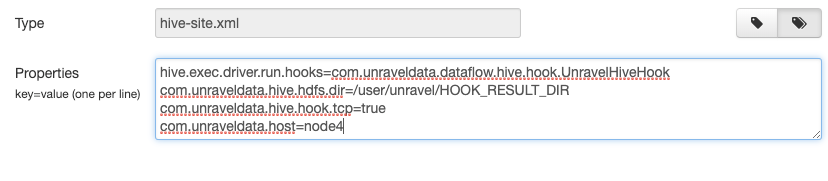

hive.exec.driver.run.hookSet com.unraveldata.host: to

unravel-gateway-internal-IP-hostnameSet com.unraveldata.hive.hook.tcp to

trueIf you have an Unravel version older than 4.5.1.0, set com.unraveldata.hive.hdfs.dir to

/user/unravel/HOOK_RESULT_DIR.

For example,

If LLAP is enabled, copy the above settings in Custom hive-interactive-site to

/etc/hive/conf/hive-site.xml.Tip

Edit hive-site.xml manually, not through Ambari Web UI.

If LLAP is enabled, copy the settings from Advanced hive-interactive-env to

/etc/hive/conf/hive-site.xml.Tip

Edit

hive-site.xmlmanually, not through Ambari Web UI.If you have an Unravel version older than 4.5.1.0, create an HDFS Hive hook directory for Unravel:

hdfs dfs -mkdir -p /user/unravel/HOOK_RESULT_DIR hdfs dfs -chown unravel:hadoop /user/unravel/HOOK_RESULT_DIR hdfs dfs -chmod -R 777 /user/unravel/HOOK_RESULT_DIR

5. Configure the Application Timeline Server (ATS)

If you're not running Tez, skip this step.

If ATS requires authentication, set these properties iIn

/usr/local/unravel/etc/unravel.properties:In Ambari, add these settings:

In

yarn-site.xml:yarn.timeline-service.enabled: true yarn.timeline-service.entity-group-fs-store.group-id-plugin-classes: org.apache.tez.dag.history.logging.ats.TimelineCachePluginImpl

If yarn.acl.enable is

true, addunravelto yarn.admin.acl.In

hive-site.xml, append these values to the following parameters:hive.exec.failure.hooks: org.apache.hadoop.hive.ql.hooks.ATSHook hive.exec.post.hooks: org.apache.hadoop.hive.ql.hooks.ATSHook hive.exec.pre.hooks: org.apache.hadoop.hive.ql.hooks.ATSHook

In

hive-env.sh, add:Use ATS Logging: true

In

tez-site.xml, add:tez.history.logging.service.class: org.apache.tez.dag.history.logging.ats.ATSV15HistoryLoggingService tez.am.view-acls:

unravel-"run-as"-user or *

In Ambari, restart all services whose components you changed.

6. Connect Unravel Server to Tez

If you're not running Tez, skip this step.

Add these properties to

/usr/local/unravel/etc/unravel.properties:Confirm that hive.execution.engine is set to

tez.set hive.execution.engine=tez;

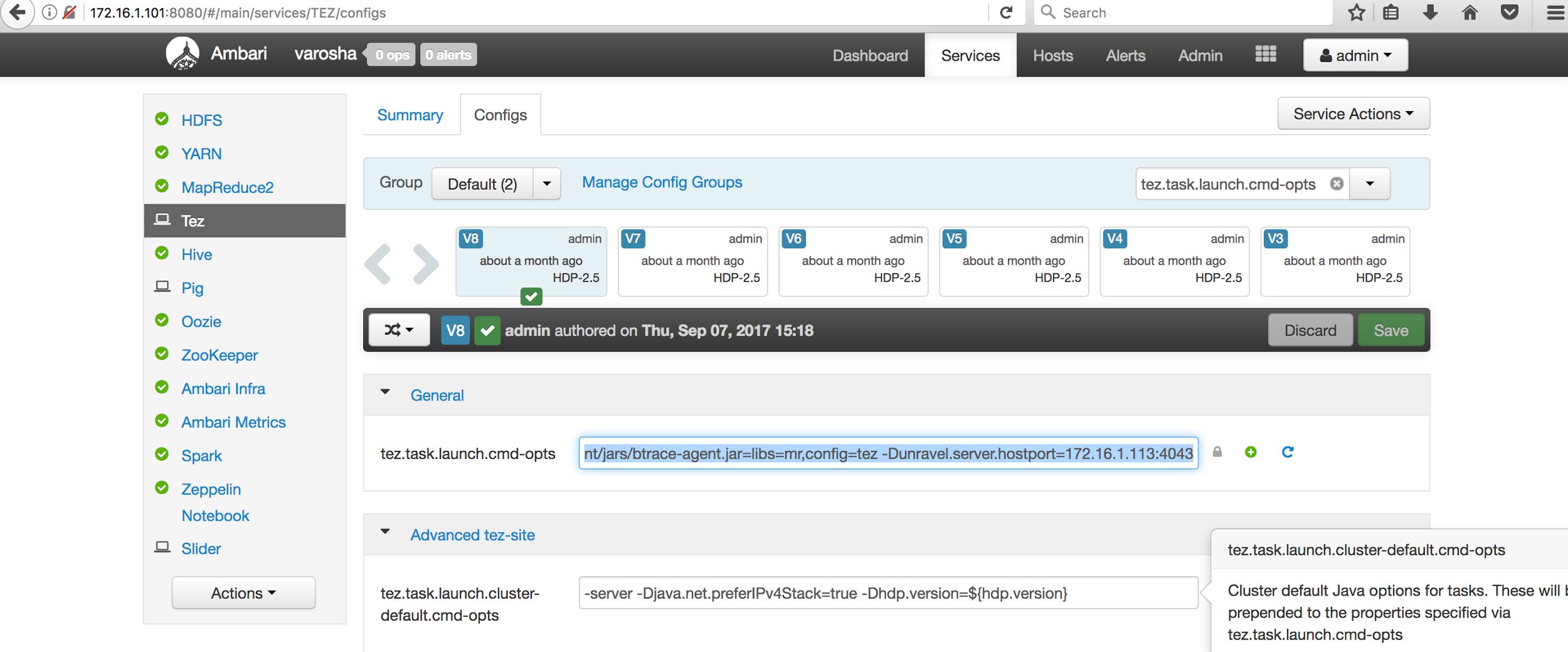

Using the Ambari, configure the Btrace agent for Tez:

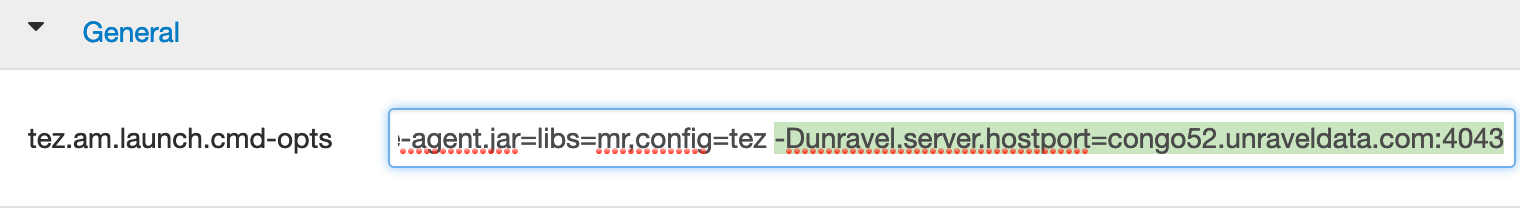

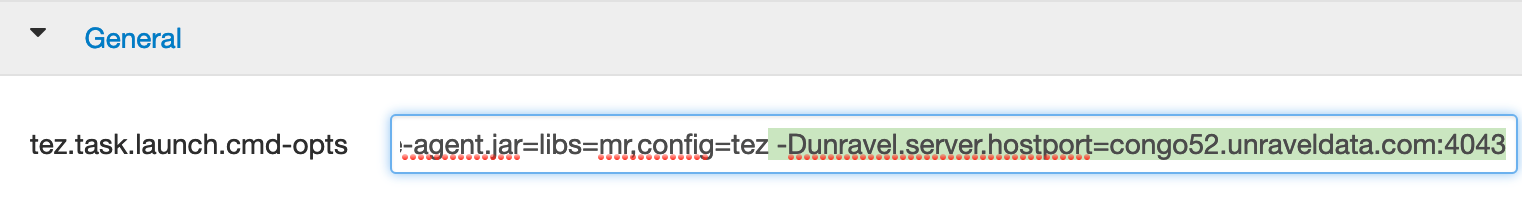

Append the Java options below to tez.am.launch.cmd-opts and tez.task.launch.cmd-opts:

-javaagent:/usr/local/unravel-agent/jars/btrace-agent.jar=libs=mr,config=tez -Dunravel.server.hostport=

unravel-host:4043For example,

Restart the affected component(s). The screenshot below illustrates this change.

Note

In a Kerberos environment you need to modify tez.am.view-acls property with the "run as" user or *.

Confirm that Unravel UI shows Tez data.

Run

/usr/local/unravel/install_bin/hive_test_simple.shon the HDP cluster or on any cloud environment wherehive.execution.engine=tez.Check Unravel UI for Tez data.

Unravel UI may take a few seconds to load Tez data.

In Ambari, restart all affected Hive services.

7. (Optional) Connect Unravel Server to Spark-on-YARN

Warning

Completion of this step requires a restart of all affected Spark services in Ambari UI.

Tip

For unravel-host, use Unravel Server's fully qualified domain name (FQDN) or IP address.

Tell Ambari where the Spark JARs are:

In Ambari Web UI, on the left side, click Spark | Configs | Custom spark-defaults | Add Property and use

Bulk property add mode, or edit

Bulk property add mode, or edit spark-defaults.confas follows:Tip

If your cluster only has one Spark 1.X version,

spark-defaults.confis in/usr/hdp/current/spark-client/conf.If your cluster is running Spark 2.X,

spark-defaults.confis in/usr/hdp/current/spark2-client/conf.

The example below uses default locations for Spark JARs. Your environment may vary.

spark.unravel.server.hostport=

unravel-host:4043 spark.driver.extraJavaOptions=-javaagent:/usr/local/unravel-agent/jars/btrace-agent.jar=config=driver,libs=spark-versionspark.executor.extraJavaOptions=-javaagent:/usr/local/unravel-agent/jars/btrace-agent.jar=config=executor,libs=spark-versionspark.eventLog.enabled=trueEnable Spark streaming.

Spark streaming is disabled by default. To enable it, edit

spark-defaults.confas follows.Search for

spark.driver.extraJavaOptionsand set it to the following value. Be sure to substitute the correct version of Spark forspark-version.spark.driver.extraJavaOptions=-javaagent:${unravel-sensor-path}/btrace-agent.jar=script=DriverProbe.class:SQLProbe.class:StreamingProbe.class,libs=spark-versionNote

The value of spark.driver.extraJavaOptions changes based on whether Spark streaming is enabled or disabled.

Unravel supports the Spark streaming feature for Spark 1.6.x, 2.0.x, 2.1.x and 2.2.x only.

Unravel has limited support for Spark apps using the Structured Streaming API introduced in Spark2.

Next Steps

For additional configuration and instrumentation options, see Next steps.