Data Insights

The first two (2) tabs provide data level insights including a snapshot of tables and partitions over the last 24 hours within a historical context.

Overview - gives a quick view into the tables' and partitions' sizes.

Details - drills down into the tables.

See Hive Metastore Configuration for information on necessary configuration settings to populate these tabs. Tables and partitions have color coded labels when applicable: Hot ( ), Warm ( ), Warm ( ), or Cold ( ), or Cold ( ). The label definitions are defined via the Configuration. ). The label definitions are defined via the Configuration. |

The last four (4) provide disk management insights to help you manage your disk usage both in terms of capacity and cluster performance.

Note

In order to use Forecasting, Small Files, and File Reports you must have the OnDemand package installed.

As of 4.5.1 Top X has moved to the Operational Insights tab.

Forecasting - forecasts future disk capacity needs based upon past performance.

Small Files - generates a list of small files based upon specified criteria.

File Reports - similar to Small Files, except canned reports for large, medium, tiny, and empty files.

Note

Click here for common features used throughout Unravel's UI.

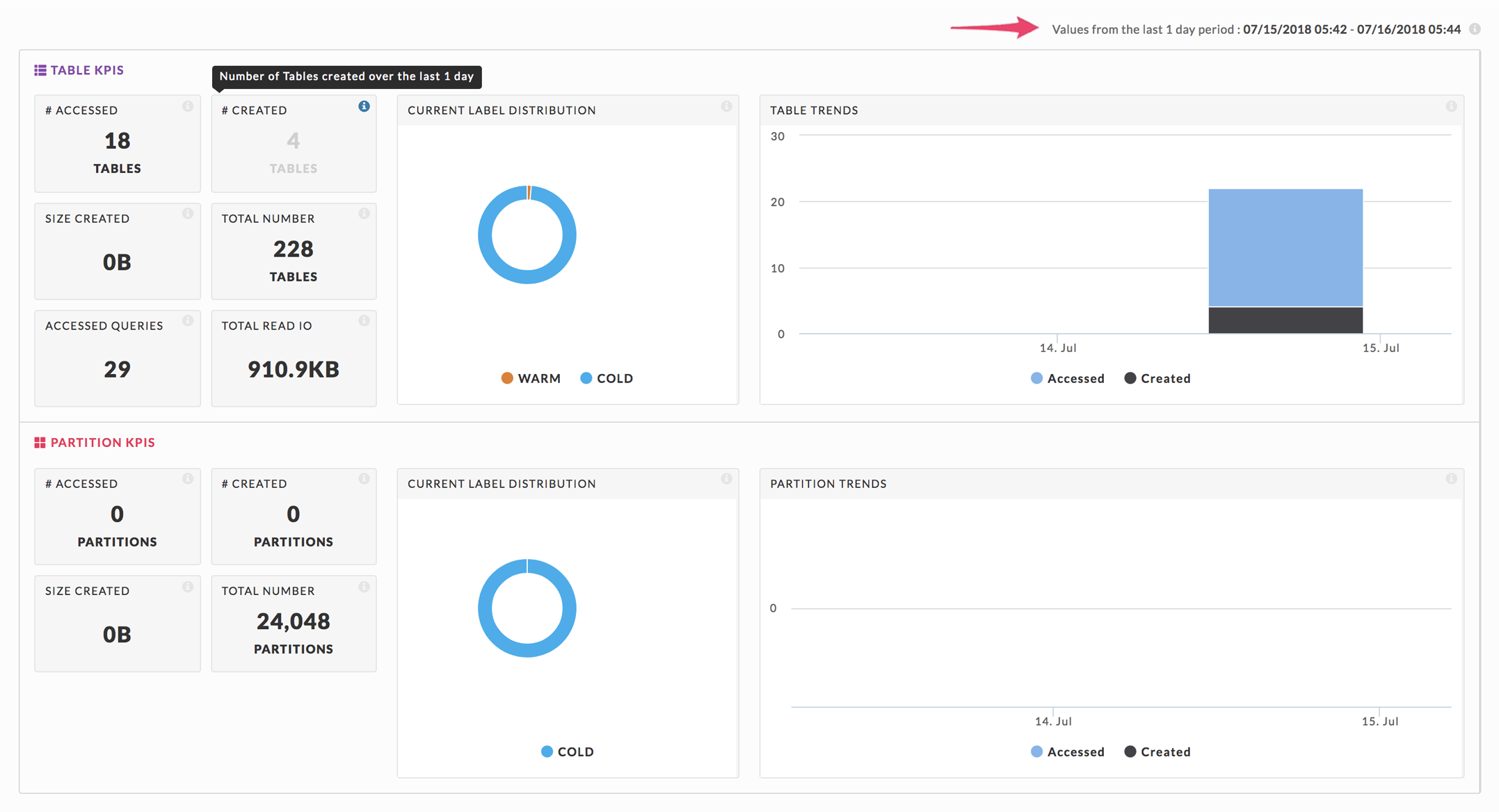

Overview

The Overview Dashboard gives a quick view into the tables' and partitions' sizes, usage, and KPIs. It has two (2) sections.

Table KPI

Partition

The time period used to populate the page is noted in the upper right-hand corner and the tool tips.

Tables & Partitions Tiles

|

Both Table and Partition KPIs sections contain:

# Accessed: Number of Tables/Partitions accessed,

# Created: Number of Tables/Partitions created,

Size Created: Size of Tables/Partitions created, and

Total Number: Total Number of Tables/Partitions currently in the system.

The Table KPIs also contains:

Accessed Queries: Total number of queries accessing the tables, and

Total Read IO: Total Read IO due to accessing the tables.

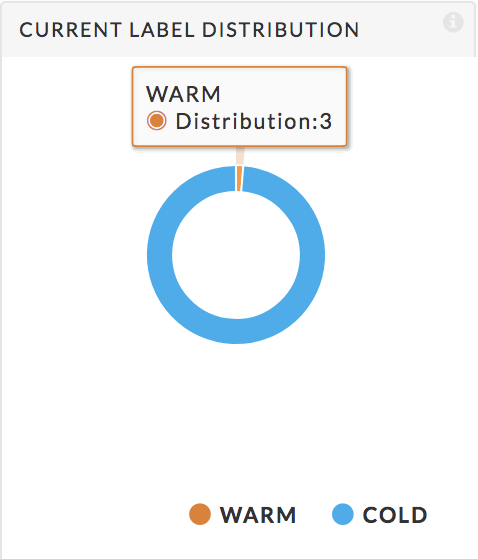

Donut Chart

These display the Current Label Distribution for the tables/partitions. See Configuration for the operating definition of the labels. The graph shows the relationship between the labels; hover over a label to see the total of tables/partitions with that label. Below we see that three (3) tables are warm.

|

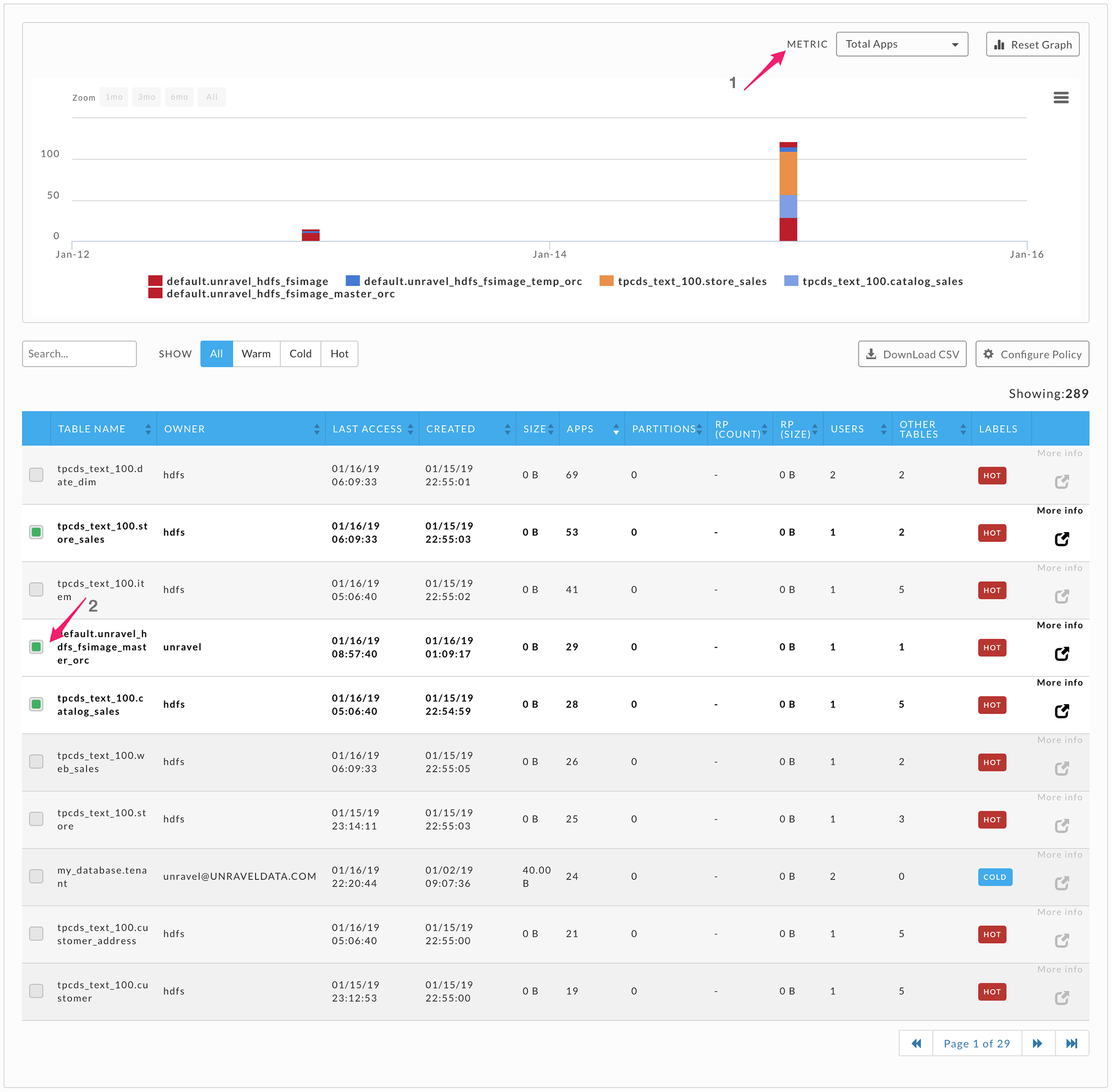

Details

The details tab has two sections, a graph and a table list. By default, the graph uses the Total Users metric and displays the first table in the list. The list is sorted on Total Users in descending order. You can also use the metrics Total Users, Total Apps, or Total Size to display the graph. The Total Apps metric (corresponding Apps column in the table) is the total number of Hive and Impala queries on the table.

Graph

Use the Metric pull down menu (1) to select Total Users, Total Apps, or Total Size as the metric to graph. Click on Reset Graph (1) to revert to displaying first table using the Total Users metric. The menu bars allow you to print or download the graph. You can select one or more tables to graph by checking box (2), next to the table's name. You can select tables over multiple pages, in the image below shows five (5) tables yet only three (3) have been checked on the page showing. The other two (2) tables were selected from other pages.

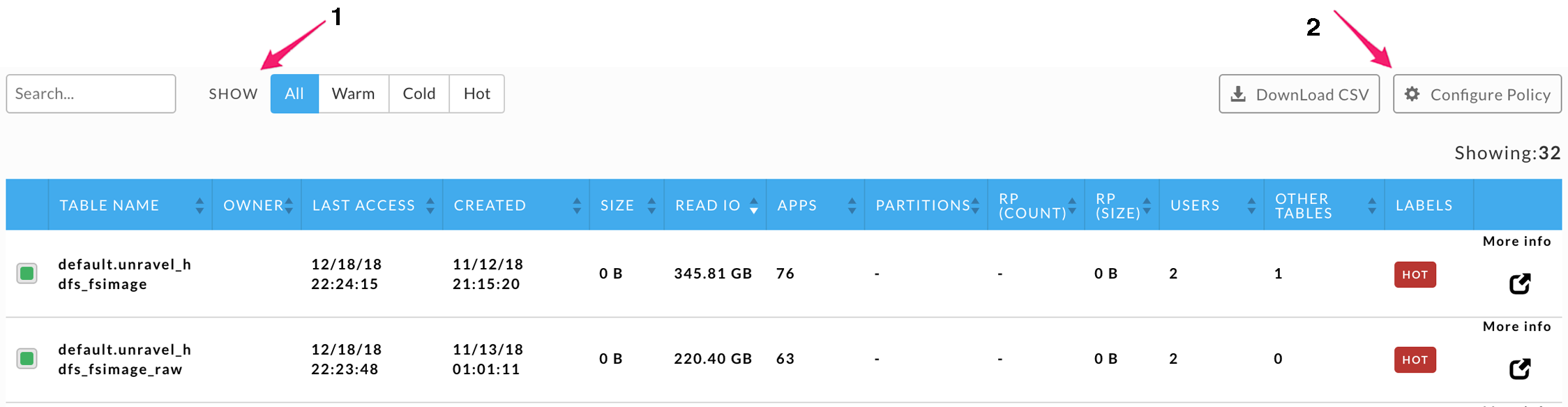

You can Search by string; any table matching or containing the specified name/string is displayed. Use Show (1) to specify the label type to use for displaying the tables. All is selected below, so every table is shown. You can sort the list by the various metrics in ascending or descending order. By default, the list is sorted on Read IO in descending order. If you have selected a table, the More Info glyph is available. Click on it to display the Table Detail pane. Click on Configuration Policy (2) to edit the label rules or Download CSV to download the table (2).

This view

Summarizes table usage and access metric.

Allows you to browse trends (KPIs).

Drill down into applications that used the table.

Lists both Hive and Impala queries.

The first table in the list above is used for the examples below.

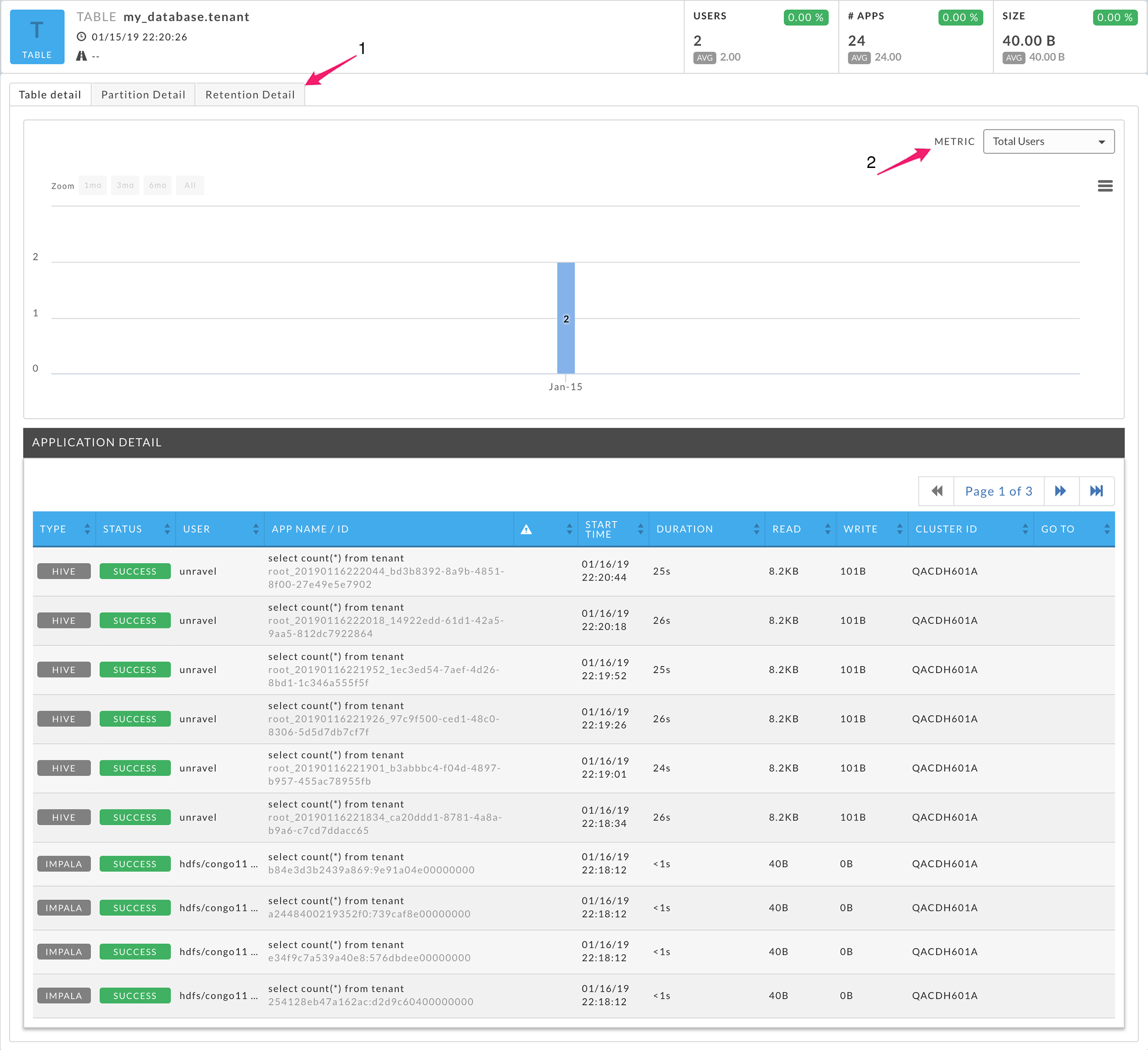

The panel's top row lists the table name, start date/time and the name/path. Hover over the name/path to display the complete path. Three (3) KPI’s are displayed: Users, # Apps, and Size.

There are three (3) tabs, Table Detail, Partition Detail, and Retention Detail (1); the default view is the Table Detail. Use the Metric (2) pull down menu to select Total Users, Total Apps, or Total Size as the metric to graph. The Application Detail lists the applications that accessed the table in the given time range. See the Application Tab section for detailed information on its format. Below the table shows both Hive and Impala queries.

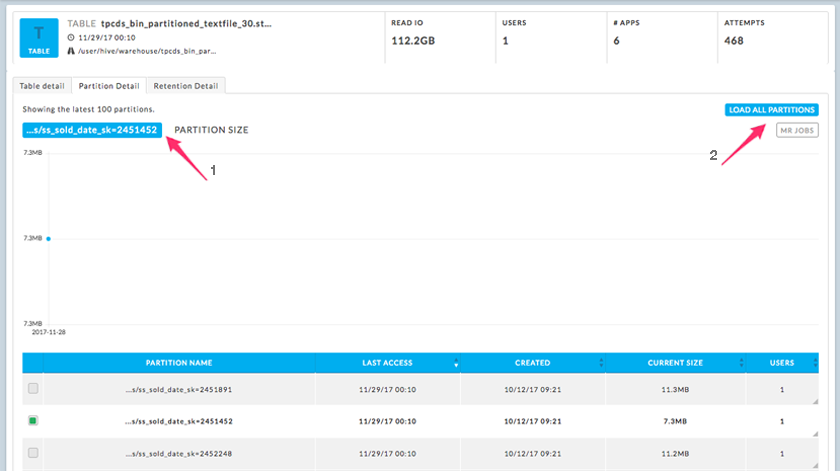

Click the Partition Detail tab for partition information.

The top left of the tab notes the number of partitions loaded, the displayed partition's name, and the view type (Partition Size or MR jobs).

By default the 100 latest partitions are loaded with the first partition listed graphed in the Partition Size view (1). To load all the partitions click on Load All Partitions (2). To switch to the MR Jobs view click on MR Jobs (2).

Chose the partition to graph by selecting the check box to the left of the partition's name. Hovering over the partition name displays the complete name/path. The partition list can be sorted on Last Access date, Created date, Current Size, or Users. Hovering over the Users number brings up the list of user(s) who accessed the partition.

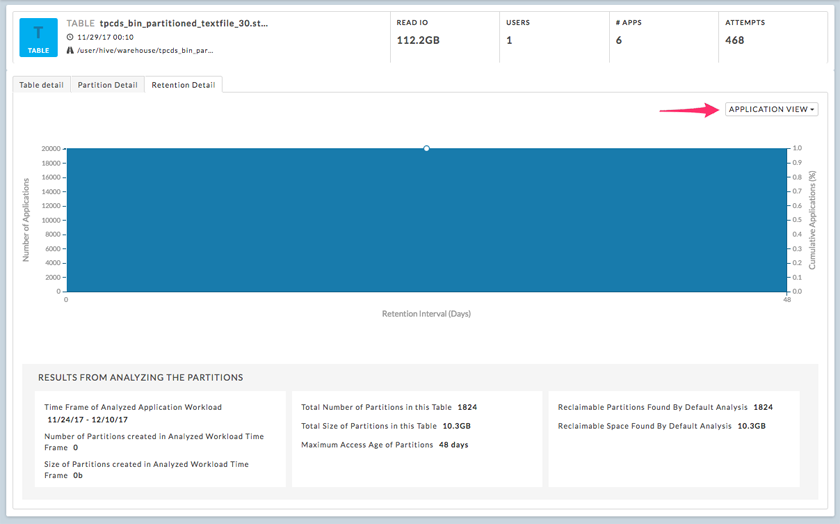

This graph initially displays the number of Applications; the pull down menu allows you to switch to the Partition Access View. Listed below the graph are the results from the partition analysis.

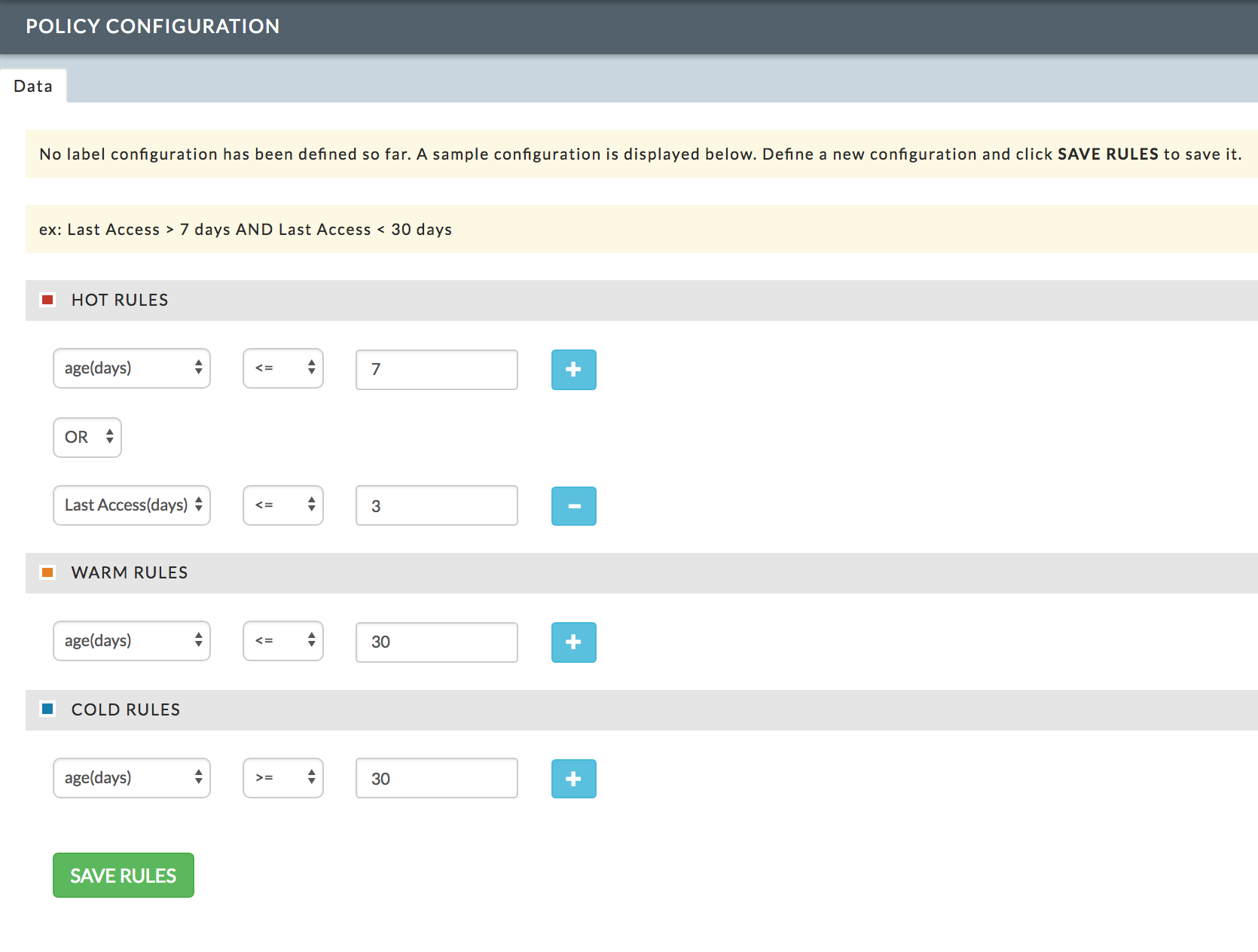

Configuration

This pane allows you to define the rules for labeling a Table/Partition either Hot, Warm, or Cold. These rules are used for the Donut chart and in the Details tab.

While the labels are immediately associated with the Tables/Partitions, the Overview Dashboard donut charts typically populate within 24 hours.

You access this modal pane from the Data > Details tab. The rules are defined per label and you can define up to two (2) rules per label. To define a rule:

From the pull down menus:

chose Age (days) or Last Access (days), and

chose the comparison operator: <= or >=.

Enter the number of days.

To add a second rule:

click on the Plus glyph,

Select the AND or OR operator from the pull down menu, and

Repeat steps 1 & 2.

To delete a second rule, click on the Minus glyph.

Click Save.

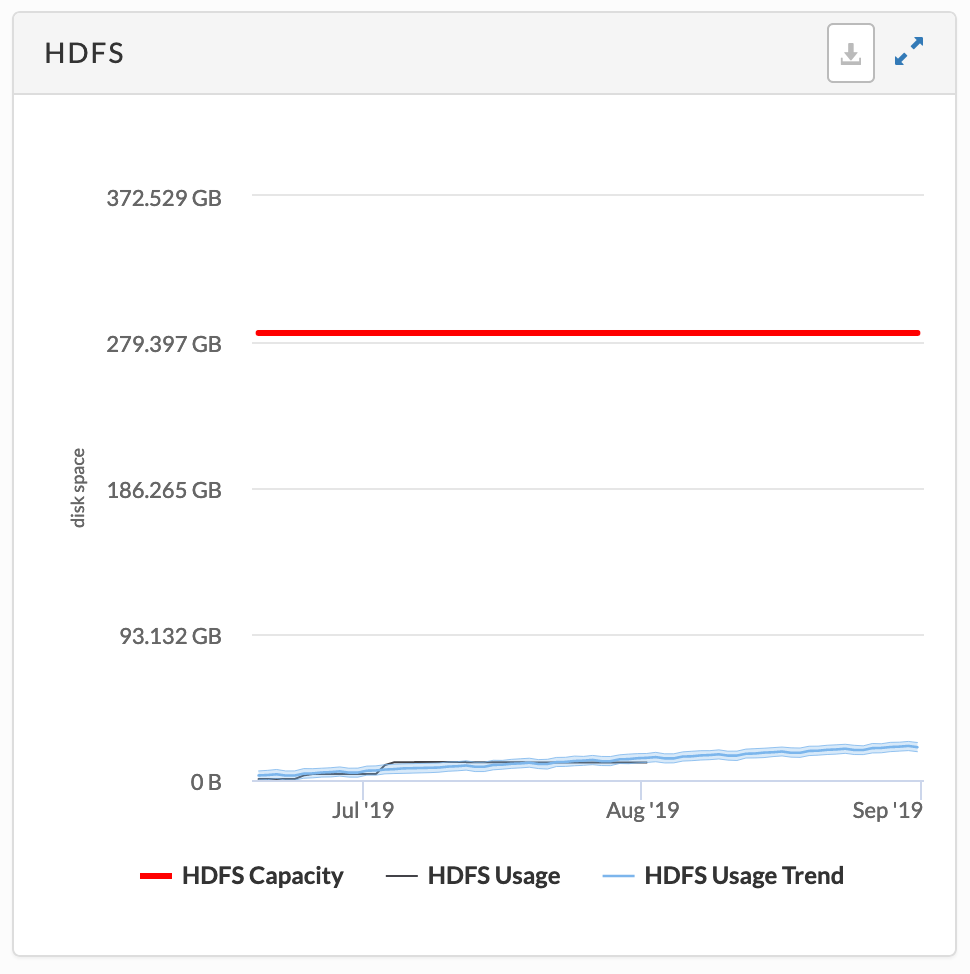

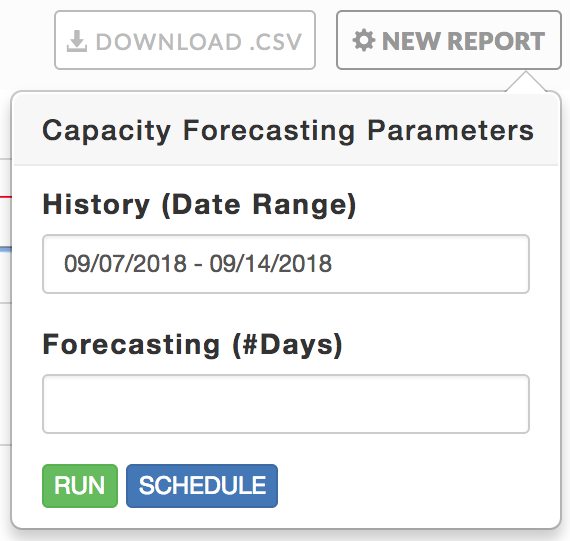

Forecasting (Disk Capacity)

Note

The OnDemand package must be installed to use this report. See here for properties which control this report.

It currently only works on Cloudera (CDH) and Hortonworks (HDP).

This report helps you monitor HDFS disk capacity usage and plan for future needs. Unravel uses your historical usage to extrapolate capacity trends allowing you to more effectively plan for, and allocate your disk resources. The tab opens displaying the last forecasting report, if any, generated. The graph displays the trend from the historical range start date to the forecast range end date (x-axis). The trend line (in blue) shows the lower, middle, and upper bounds of Unravel's prediction. The y-axis is determined by your actual physical disk capacity. The report parameters are listed above the table headings.

Click New Report to create a report. Enter the History (Date Range) to use, the default is the prior seven (7) days. Enter the Forecasting (number of days) you want to generate a forecast for. In the above example, a history of six (6) days was used to generate a five (5) day forecast. Click Run to generate the report or Schedule to generate the report on a regular basis. (See Scheduling Reports.)

|

While Unravel prepares to generate the report Run is replaced with Running and a countdown appears above it. Once Unravel starts the generation the popup closes and the New Report button pulsates blue. A light green bar appears when the report was completed successfully and results are displayed. Upon failure the bar is light red and the New Report button becomes orange.

You can download the report currently displayed by clicking the Download .csv button.

All reports, whether scheduled or ad hoc, are archived. Successful reports can be viewed or downloaded from the Report Archives tab.

Small Files

Note

The OnDemand package must be installed to be able to use this report. It requires hdfs privileges and currently only works on HDP/CDH. If you can't grant hdfs privileges, you must configure these properties.

Each small file is accessed by a single mapper, therefore a large number of small files can lead to a large number of mappers. In turn, mappers are costly to run so applications using a large number of small files drive up your costs. This report helps you to identify users who create/use an excessive amount of small files.

You can use this information to take corrective action such as:

Combine multiple files into large files.

Notify, limit, or block/ users who create/use an excessive amount.

in order to:

Correct and prevent future performance degradation.

Lower your costs to run applications.

The small file window by default opens with the last report that was generated, if any. The report parameters are listed above the table headings (1), and you can search the path list by string. The list is sorted in descending order of the total number of small files in the directory. Click Download CSV to download the report as a .csv file.

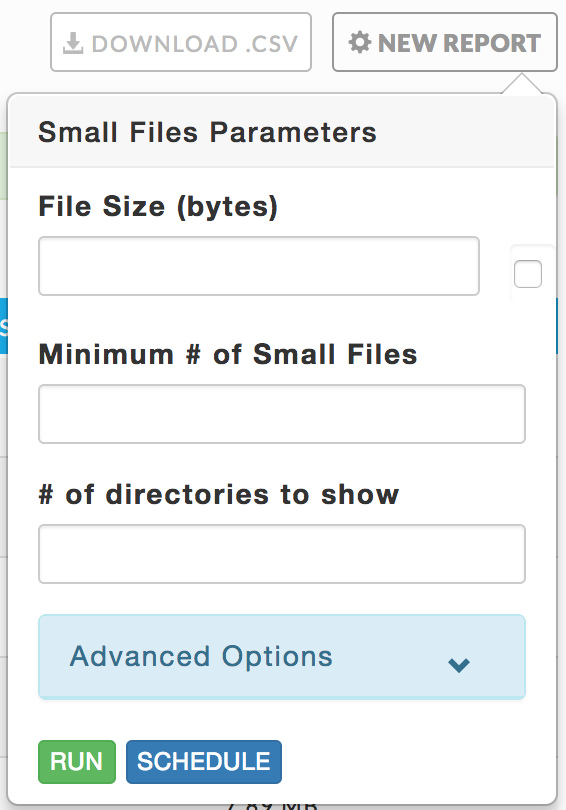

Click New Report to generate a new report. The parameters are:

Average File Size (bytes): the average small file size in a directory. Check the box to its right to enter a small file size to use instead.

Minimum # of Small Files: which are in the directory.

# of Directories to Show: is the maximum number of directories to display.

Advanced Options:

Min parent directory depth: minimum depth to start at, root + x descendants, i.e., 0=root, 1=root's children (/one), etc.

Max parent directory depth: maximum depth to end at, root + x descendants, i.e., 1=root's children (/one), 2=root's grandchildren, (/one/two), etc.

Drill down sub-directories: determines how/where the files are listed. Yes (default): lists the file in all its ancestor's list. No: list file in its directory list only.

Click Run to generate the report and Schedule to generate the report on a regular basis. See Scheduling Reports below.

|

While Unravel prepares to generate the report Run is replaced with Running and a countdown appears above it. Once Unravel starts the generation the popup closes and the New Report button pulsates blue. A light green bar appears when the report was completed successfully and results are displayed. Upon failure the bar is light red and the New Report button becomes orange.

Click Download .csv to download the current report being displayed.

All reports, whether scheduled or ad hoc, are archived. Successful reports can be viewed or downloaded from the Report Archives tab.

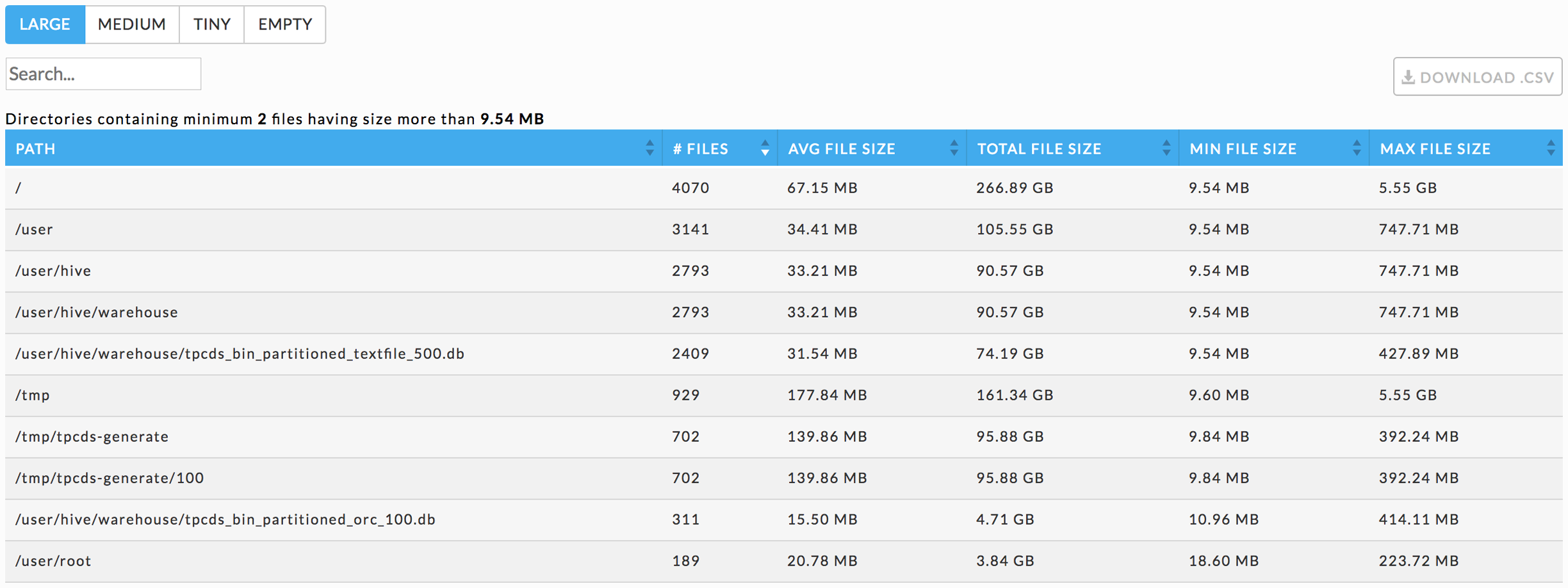

File Reports

Note

The OnDemand package must be installed to use this report. It requires HDFS privileges and currently only works on HDP/CDH. If you can't grant HDFS privileges, you must configure these properties.

This report is the same as Small Files except they are automatically generated using the File Reports properties. By default, these reports are updated every 24 hours and are not archived.

The default size for the files are:

large file is any file with more than 100 GB size,

medium file is any file with 5 GB - 10 GB size, and

tiny file is any file with less than 100 KB size.

Click on the size buttons (Large, Medium, Tiny, and Empty) to view the report. You can search by string; any directory matching or containing the string is displayed. Click Download CSV to download the report.