Snowflake GE integration

The custom Unravel Action for Great Expectations is provided as a .whl file, which must be installed in the Python environment that runs the Great Expectations test suite. After you install this package and add an Unravel action to the Great Expectations checkpoints, the details of Expectation failure events are pushed to Unravel. These details include the following:

Expectation details

The details of the expectations set for a Data table.

Expectation result evaluations

Results of an expectation tracked over a period.

Prerequisites

An up-and-running Unravel instance.

A Great Expectations test suite. If you do not have one already set up, you can follow the setup guide from the Great Expectations team.

Unravel Action for Great Expectations.

Cluster ID (contact the Unravel support team to obtain it). This field is optional and is required only for multi-cluster deployments. When you specify the cluster ID, you must also set the property com.unraveldata.datastore.events.es.use_cluster_id to true.

A working installation of Python version 3.7.33 or 3.8.12.
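The Python prerequisite can be checked from inside the target environment before installing anything. The following is a minimal sketch, not part of the Unravel package. It assumes that matching the minor version (3.7 or 3.8) is sufficient, and the function name `is_supported_python` is hypothetical.

```python
import sys

# Minor versions named in the prerequisites (3.7.x and 3.8.x).
# Assumption: the patch level does not need to match exactly.
SUPPORTED_MINORS = {(3, 7), (3, 8)}


def is_supported_python(version_info=sys.version_info):
    """Return True if the given version's major.minor is 3.7 or 3.8."""
    return tuple(version_info[:2]) in SUPPORTED_MINORS


# Report on the interpreter that is running this script.
print("Supported Python version:", is_supported_python())
```

Run this with the same interpreter that runs the Great Expectations test suite, so that the result reflects the environment where the .whl file will be installed.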

Integrating Great Expectations with Unravel

The Unravel contrib package for Great Expectations, which includes the .whl file, must be installed in the same Python environment as the other Great Expectations dependencies.

Set up the Python environment.

Install the unravelaction from the .whl file provided into the Python environment that runs the GE test suite.

    pip install <file_name.whl>

Import the unravelaction in the file where the checkpoint is defined.

    import unravelaction

Configure unravelaction.

Using an editor, open great_expectations.yml and specify the following parameters in the action_list block as shown:

    action_list:
      - name: UnravelAction
        action:
          class_name: UnravelAction
          module_name: unravelaction
          lr_url: "http://localhost:4043"
          cluster_id: "<cluster_id>"

| Parameter | Description |
|---|---|
| name | Provide an action name for storing validation results. |
| class_name | Set it to UnravelAction. |
| module_name | Set it to unravelaction. |
| lr_url | Specify the URL of the log receiver (LR). Contact the Unravel Support team for more details. |
| cluster_id | Specify the cluster ID. Contact the Unravel Support team to get the cluster ID. |
| cert | Specify the path to the certificate required for server verification when SSL/TLS authentication is used. |
| verify_server | Specify true or false. If false, server verification is disabled. This is useful when a self-signed certificate is used for the LR endpoint. |
| Username for LR endpoint authentication | Provide the user name for LR authentication. This is required only when LR endpoint authentication is enabled. |
| Password for LR endpoint authentication | Provide the password for LR authentication. This is required only when LR endpoint authentication is enabled. The password is supplied through an environment variable. |
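If the checkpoint is assembled in Python rather than edited by hand, the same action entry can be expressed as a dictionary. The following is a minimal sketch under stated assumptions: the helper names `build_unravel_action` and `read_lr_password` are hypothetical and not part of the unravelaction package, the nesting of `lr_url` and `cluster_id` under `action` follows the YAML layout in this guide, and the environment variable name `UNRAVEL_LR_PASSWORD` is a placeholder because this guide does not name the actual variable.

```python
import os


def build_unravel_action(lr_url, cluster_id=None, name="UnravelAction"):
    """Return one action_list entry as a dict that mirrors the YAML keys."""
    if not lr_url.startswith(("http://", "https://")):
        raise ValueError("lr_url must start with http:// or https://")
    action = {
        "class_name": "UnravelAction",
        "module_name": "unravelaction",
        "lr_url": lr_url,
    }
    # cluster_id is optional; it is needed only for multi-cluster setups.
    if cluster_id:
        action["cluster_id"] = cluster_id
    return {"name": name, "action": action}


def read_lr_password(env_var="UNRAVEL_LR_PASSWORD"):
    """Read the LR password from the environment (placeholder variable name)."""
    return os.environ.get(env_var)


entry = build_unravel_action("http://localhost:4043", cluster_id="<cluster_id>")
print(entry)
```

Keeping the password out of the configuration file and reading it from the environment is consistent with the table above. Confirm the actual variable name with the Unravel Support team.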

Integrating custom expectations for Unravel

If you have created custom expectations in the Expectations suite, make sure to define them in the Unravel contrib package as well. This maps each expectation to the appropriate event.

You can use the msg parameter in the unravelaction to configure a custom expectation.

msg is a nested Python dictionary that can be defined in the same file as the checkpoint. Each key is an expectation name, and each value is a dictionary whose keys are event details and whose values are the corresponding settings.

For example:

    msg = {
        "expect_column_distinct_values_to_be_in_set": {
            "eventName": "TableRowQualityEvent",
            "eventType": "TE",
            "title": "Expectation Validation Failure",
            "detail": "<ul><li>The range of values in the column is outside the specified range</li></ul>",
            "actions": "<ul><li>A mismatch in the expected values in rows is usually an indicator of outliers. Review the relevant code for recent changes</li></ul>"
        }
    }

Running Great Expectations checkpoint

After integrating Great Expectations with Unravel, you can validate the data and send the expectation failures to Unravel by running a checkpoint. Refer to How to validate data by running a Checkpoint.
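Before running a checkpoint that uses custom expectations, it can help to sanity-check the structure of the msg dictionary described in the previous section. The following is a minimal sketch, not part of the unravelaction package. The function name `find_msg_problems` is hypothetical, and treating all five keys from the earlier example as required is an assumption.

```python
# Keys taken from the msg example in this guide.
# Assumption: every custom expectation needs all five of them.
REQUIRED_EVENT_KEYS = {"eventName", "eventType", "title", "detail", "actions"}


def find_msg_problems(msg):
    """Return a list of problems; an empty list means the structure looks right."""
    if not isinstance(msg, dict):
        return ["msg must be a dictionary"]
    problems = []
    for expectation, details in msg.items():
        if not isinstance(details, dict):
            problems.append(f"{expectation}: value must be a dictionary")
            continue
        missing = sorted(REQUIRED_EVENT_KEYS - set(details))
        if missing:
            problems.append(f"{expectation}: missing keys {missing}")
    return problems


msg = {
    "expect_column_distinct_values_to_be_in_set": {
        "eventName": "TableRowQualityEvent",
        "eventType": "TE",
        "title": "Expectation Validation Failure",
        "detail": "<ul><li>Example detail</li></ul>",
        "actions": "<ul><li>Example action</li></ul>",
    }
}
print(find_msg_problems(msg))
```

The check only inspects the shape of the dictionary. It does not confirm that Unravel recognizes the event name or event type.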

Viewing Great Expectations

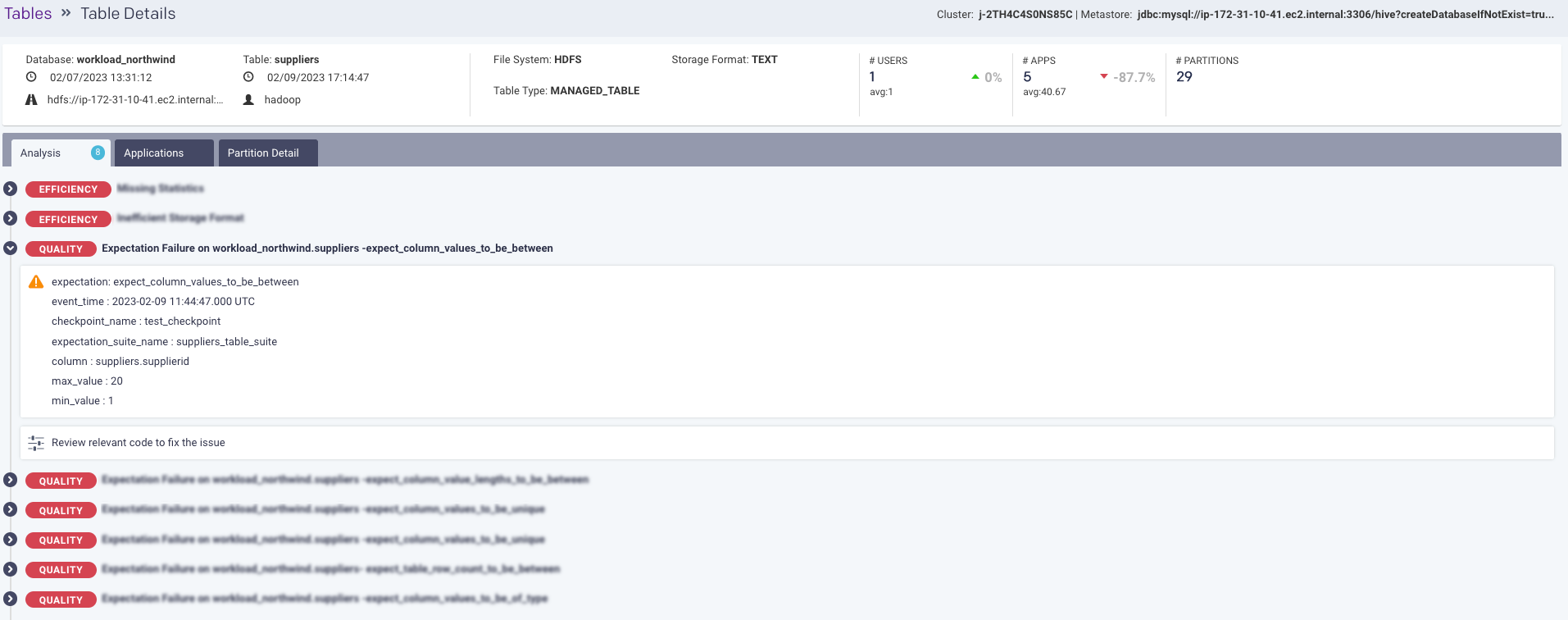

The validation results of Great Expectations are presented as Unravel Quality events. You can view these events on the Unravel UI, on the table details page of each table that has had an expectation failure.

On the Unravel UI, go to Data > Tables.

Specify the filters for Metastore Type, Workspace, and Metastore if required.

From the table data, select a table for which the expectation suite was executed and open it. The Table details page is displayed. You can view the Great Expectations events, if any, under the Analysis tab as Quality events.

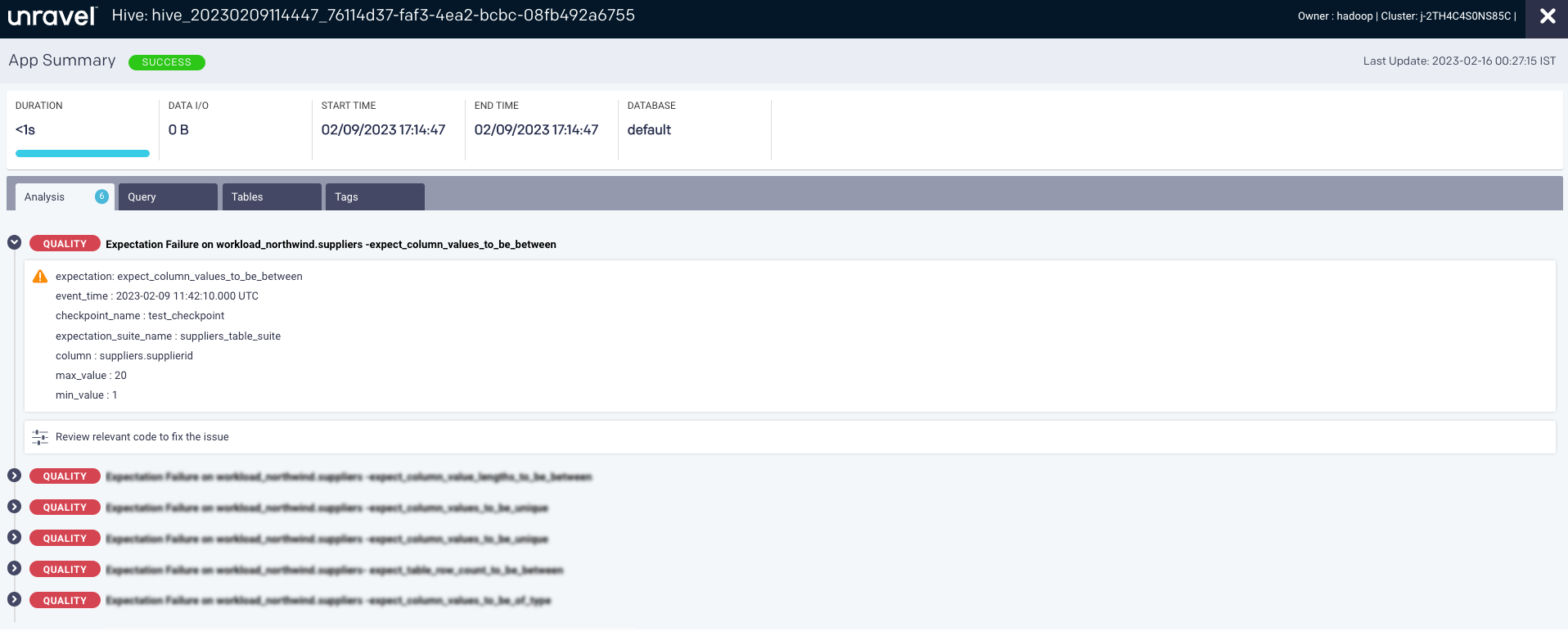

You can also access the Quality events for an application from Jobs > Jobs details page > Analysis tab, where the expectation failures are aggregated for the tables accessed by that application.